Mon, Aug 8, 2016

Hey, it’s been a while since I posted here. My spare time has been taken up with my business, and with holidays - I spent a month traveling around the UK and France and following the Tour de France.

So this is big catchup time - some of these links may be a few months old but are still relevant.

Serverless corner:

What the hell is serverless?

Randomly terminate instances in an autoscale group using Chaos Lambda.

How to manage apt repos in S3 using Lambda to rebuild the catalog.

Securing serverless apps

Serverless NYC - a personal report. There are Serverless conferences running in London and Tokyo later this year. Sydney soon?

Smallwins have published some authoring tools for Lambda

Not-serverless:

A neat hack to manage SSH access with IAM.

Lessons learnt implementing an IoT Coffeebot. I used an AWS IoT button to control my Sonos system and can sympathise with the ‘don’t expect instant feedback’ comment.

More lessons learnt, this time from a year of running Elasticsearch in production - some useful tips.

A really interesting look at the question of whether Apple should build their own cloud. Of course the answer is ‘yes’ :)

CloudNative introduced Yeobot, a Slack bot that allows you to query your AWS resources. Peter Sankauskas talked more about this and the plans for the future in episode 4 of Engineers & Coffee, which is well worth a listen.

Getting started with Kinesis streams - this is a great walkthrough including sample code for dashboards.

Cloudcraft have announced their pricing. I’m on the fence about this. The diagrams look pretty and the in-built pricing calculator is a great feature. Import is useful but is likely to choke on larger environments (or I expect the visualisation will struggle - every other tool I have tried in enterprise sized deployments has done this). I’m not convinced that 3D perspective diagrams are any clearer than flat ones - to me they are less clear. The pricing is high - I use Lucidchart and their Team offering is US$20/month for 5 users. For Cloudcraft, that’s US$245/month.

An excellent guide to using Postgres for pros and beginners.

Fn::Include for CloudFormation templates

A walkthrough on how to encrypt ephemeral EC2 volumes using KMS.

All the cool Big Data kids love R. Here’s how to build an R cluster using cfncluster.

Sample Cloudformation templates for deploying S3 static sites, NAT gateways, and security policies.

The Segment Stack - build your own Heroku with AWS, Docker and Terraform.

Nulab migrated to Aurora with no service interruption.

CapitalOne presented in the keynote at re:Invent last year and have released their security product, Cloud Custodian. Looks a lot like Config Rules.

Site Reliability Engineering at Google - excellent preso.

It’s an article focussed on the game ‘The Division’ but applies to other (all?) areas of tech: never trust the client.

A really interesting look at whether AWS will release their IaaS for use in private data centres. The bottom line is - if it will make money, they will do it.

I don’t know enough about Google Cloud in comparison with AWS so this guide to Google Cloud Platform for AWS Professionals is relevant to my interests.

Some stats and info from AWS on the tech behind Prime Day.

À plus tard!

Mon, May 2, 2016

Last week saw Sydney’s turn for the AWS Summit roadshow. With the Sydney Exhibition & Conference Centre still being rebuilt, it was back out to the Hordern Pavilion for a second year but unlike last year the weather was clear and the Summit was spread over two days - much more room, much more comfortable.

The Summit tries to be something for everyone. One statistic mentioned in the first day keynote was that in the survey sent to Summit registrees, 45% had deployed production workloads in AWS. In the bubble I am in - working with AWS services every day, speaking at AWS meetups, following AWS blogs, speaking to other AWS architects - it’s easy to forget that there are plenty of companies out there just starting their cloud migrations (not ‘journeys’ - please, no more ‘cloud journeys’).

There was an expectation that Sydney availability for the two key AWS serverless components - API Gateway and Lambda - would be the main keynote announcement on Wednesday, with either immediate availability or at least a very near launch date. After all, Lambda has been available in other regions for over a year now.

And sure enough it was the big news of the day - but not in a good way. The reaction to the announcement that Lambda would be available ‘in the next few months’ did not set the crowd on fire. There weren’t any boos, but it felt pretty close to that. Beware of angry nerds! I’ve been keen on pushing out serverless architectures since boarding the bandwagon at re:Invent 2015 and we had a project designed and ready to go - the Lambda components will now have to be replaced with NodeJS on EC2 as a stopgap.

AWS do need to be careful that they don’t start to get a reputation for vapourware - or at least slow deployments. Lambda has rolled out slowly globally, and it’s looking like EFS won’t make it to release anywhere in 2016 despite being announced over a year ago (at the San Francisco Summit in April 2015).

The lack of Lambda was highlighted again in the best session I attended on day one, on Lambda and serverless architectures. The session was hosted by Ajay Nair (the AWS PM for Lambda) and included a customer story from Sam Kroonenburg from A Cloud Guru, the AWS video training people I’ve mentioned here before, who run their entire platform serverless. Sam shared some great stats, including that they have 50k+ users and can supply a course - videos, tests, comments etc - for just $0.12 each. There was an apologetic ‘coming soon - sorry’ for Lambda in Sydney from Ajay. He did look genuinely disappointed - hopefully it is close.

Lambda was a big part of the second day keynote demo too, with Glenn Gore saying that his IoT/Echo demo (Echo (Alexa) Skills are Lambda backed) was not too latency crippled despite being hosted in the US.

Outside of the Lambda disappointment there was a decent mix of sessions for all levels - plenty of 101 and 201 ‘start here’ guides, along with more meaty 301 and 401 tracks for the old hands, including Evan Crawford (AWS) and Michael Fuller from Atlassian presenting an updated version of their cost control session, with some interesting ideas on rightsizing EC2 instances.

In between sessions there were plenty of vendor booths to check out on the Expo floor - you can see the full list of sponsors here but these ones stood out for me:

SignalFx were one of the many many many sponsors in the monitoring space - but are differentiating themselves by concentrating more on the infrastructure side instead of the application side. Think Nagios or Icinga, not New Relic.

Speaking of them, of course New Relic and Datadog were both there - Datadog’s event correlation is cool (overlay a feed from your CI/CD pipeline over your metrics - oh look, memory usage went up after that release went live..). I would be surprised if New Relic didn’t release something similar very soon, but if I were choosing between the two right now I think Datadog would just edge it.

Rackspace and Bulletproof were both keen to manage your cloud for you. I know people working at both and know both companies do an excellent job, if you’re happy to pay the surcharge. Maybe it’s cheaper than running your own Ops department?

Cloudability, CloudCheckr and CloudMGR all want to help with your cloud cost and security needs. CloudMGR have an interesting product that basically builds on top of CloudCheckr - CloudCheckr reports, CloudMGR can fix reported issues - buy RIs, close security groups etc.

Dome9 recently launched an IAM protection product to complement their security group protection product. Both apply rules on top of the base AWS product, to enhance and further secure them - e.g. the security group product checks and reverts unauthorised security group changes. Both products could do with clearer naming.

ExtraHop injects itself into your AWS infrastructure for agentless monitoring at the network level.

The vendor hall was also a great place to catch up with ex-colleagues, meet up with regular contacts (good again to see Darrell and Gary from PolarSeven, and Chris from GorillaStack - best 3 out of 5 in the banana throwing game?), meet people IRL that I knew only from LinkedIn, Twitter or Slack (hi Andre and Adam from CMD Solutions), and talk to others in the cloud industry.

With the certification lounge serving decent coffee and the opportunities to network with fellow cloud nerds - this was the best Summit yet - bring on re:Invent 2016.

Fri, Apr 29, 2016

A while ago I posted about the AWS IoT button that I picked up at re:Invent in 2015. You can read that post in full but in short I wanted to use the button to turn off my Sonos system when I left the house, but could not get it to work reliably - multiple invocations were firing off, as if the button press was registering multiple times, so the Sonos system would turn off and on again.

With help from Harry Eakins from Lab126 (the Amazon division handling the IoT button, Dash buttons etc) I did get to the root cause of the issue but forgot to update my post until I was reminded by Jinesh Varia, the Head of Mobile and IoT Developer Programs at AWS.

The issue boiled down to two things.

- A fault in my code.

- The behaviour of Lambda when errors occur.

I’ll deal with these in reverse order.

When Lambda calls fail they can, under some circumstances, retry. I wasn’t aware of this - if I had been aware it would have been an easier diagnosis. I did Google for retry behaviour when debugging this and could only find references to retrying when handling DynamoDB and Kinesis streams - which this function does not use. Even searching now I’m struggling to find it but thanks to Harry for pointing me to the definitive source here.

In my code I was not explicitly calling context.succeed() or context.fail() to terminate my function. I didn’t dig into it in depth but it looks like not exiting the function via one of those methods means Lambda considers the request to have failed, and so it retries. I updated my code to exit cleanly and it worked. I’ve updated my Gist to include those context.* methods.

[Note that the context.* methods are for the Node 0.10 version of Lambda only which is now deprecated. You can see how to transition to Node 4.3 Lambda here.]
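The double firing can be pictured with a toy model. This is a Python sketch purely for illustration (the real function was Node.js) and it is not how Lambda is implemented: `ToyContext`, `Sonos`, `invoke` and the limit of two attempts are all made-up names and assumptions, as is the idea that each invocation simply toggles the Sonos state.

```python
class ToyContext:
    """Stand-in for the Lambda context object (hypothetical, not the real API)."""

    def __init__(self):
        self.finished = False

    def succeed(self):
        # Signal to the toy invoker that the handler exited cleanly.
        self.finished = True


class Sonos:
    """Stand-in for the Sonos system: starts playing, each toggle flips it."""

    def __init__(self):
        self.playing = True

    def toggle(self):
        self.playing = not self.playing


def invoke(handler, sonos, max_attempts=2):
    """Run the handler, retrying if it never signalled completion (assumed behaviour)."""
    attempts = 0
    for _ in range(max_attempts):
        attempts += 1
        context = ToyContext()
        handler(sonos, context)
        if context.finished:
            break
    return attempts


def buggy_handler(sonos, context):
    sonos.toggle()  # does the work but never tells the invoker it is done


def fixed_handler(sonos, context):
    sonos.toggle()
    context.succeed()  # explicit clean exit, so no retry


buggy_sonos, fixed_sonos = Sonos(), Sonos()
buggy_attempts = invoke(buggy_handler, buggy_sonos)
fixed_attempts = invoke(fixed_handler, fixed_sonos)
print(buggy_attempts, buggy_sonos.playing)
print(fixed_attempts, fixed_sonos.playing)
```

In this model the buggy handler runs twice and leaves the Sonos playing again, while the fixed handler runs once and leaves it off.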

I do find the 30 - 60 second wifi association time for the IoT button makes this not the best fit for this purpose - I’m often in a hurry to leave the house and want close to instant feedback to my request to turn off Sonos. Because of this I now use a voice command with my Echo - ‘Alexa, turn off Sonos’ - which uses a project that provides an emulation of a Hue bridge interfacing with the echo-sonos endpoint. This is a complete in-house-network solution (the Echo detects the Hue bridge emulator as a home automation device) so latency is very low. I’ll post more details about this another time.

Thanks again to Harry for helping with IoT setup and logging and to Jinesh for prompting me to post an update.

Thu, Apr 14, 2016

AWS is 10! Werner Vogels, AWS CTO, shared his 10 lessons learnt over that time.

Docker strategy deemed overly ambitious by users, partners. The users of Docker that I encounter are happy with it but want to use a range of tools - picking the best of each for their needs - e.g. Kubernetes over Swarm, etc. So long as the base Docker product continues to develop I see no harm in that. It’s like the Google strategy of throwing a bunch of ideas out and killing the ones that don’t get mass adoption. Better that than being a single product company that gets ‘Sherlocked’.

The publishers of the excellent AWS certification courses, A Cloud Guru, show us behind the scenes of their serverless infrastructure. They use Firebase which does appear to have a concerning amount of downtime and/or the inability to do rolling upgrades.

Still no Google data centres in Australia. Does it matter? Yes, for latency sensitive applications. For data sovereignty, not so much - if you go with a US company don’t be surprised to find the region of adjudication is the US regardless of where you store your data.

Managing Digital Assets in a Serverless Architecture - a decent deck from AWS and Vidispine

Another deck on serverless architectures from the AWS Lambda Product Manager. I really wish I could find a recording for this one - anyone?

Google Stackdriver is a combined logging, analytics and dashboard tool for GCE and AWS.

Immutable deployments with AWS Cloudformation and Lambda. Next level CFN work here.

IAM Docker - one issue with Docker containers is that multiple containers on one EC2 host share a role. This tool is effectively a ‘proxy’ for IAM role requests from containers, allowing different roles to be assumed.

A quick start guide for Puppet in AWS. Includes a Cloudformation template and installs a 3.8.6 Puppet master and Windows and Linux agents. Still no OpsWorks Puppet eh.

Creating cross account permissions for AWS CodeDeploy.

A decent overview of SQL and NoSQL from Ars.

A laudable attempt to collect post incident reports - there is much to learn from history

Manage your AWS infrastructure with Amazon Alexa. At last my dream has come true - ‘Alexa, terminate all the untagged EC2 instances’.

Yeobot is a SQL command line for AWS implemented as a Slack plugin. ChatOps lives.

Alexa Voice Service running on the Raspberry Pi.

181PB in optical cold storage. Scale, and then some.

Thu, Apr 7, 2016

Every presentation needs a Simpsons reference.

On 6th April I gave a presentation at the AWS Sydney Meetup on AWS Billing and Cost Control - how to identify usage, reduce resource cost and design for lower cost.

The presentation went well although I did skip some of the speaker notes due to lack of time. There is a lot of information in the notes (the slides are mostly wordless - a style I’m testing - any feedback?) so I would recommend keeping the notes open while going through the deck. The Notes button is underneath the slide presentation on the main SlideShare site.

I’ve given more details about billing tools in this post.

I had some great conversations after the presentations - thanks to everyone who came to ask follow up questions or just chat.

Thanks also to Gary and Darrell from PolarSeven for organising another awesome Meetup. Be sure to consider PolarSeven for your AWS consultancy requirements, they do great work and we need to support the companies that put their time and money into these events.

SlideShare link: AWS billing and cost presentation - AWS Sydney Meetup April 2016

Wed, Mar 30, 2016

On 6th April 2016 I’m presenting at the AWS Sydney meetup on the subject of AWS cost control. It will be exciting.. honest! Designing and implementing solutions in a cost effective manner is a part of architecture that I find fascinating and a real challenge.

As part of the presentation I will be mentioning several tools which either I use to help manage cost or that I know others use, so I’m using this post as a way to collate these tools for easy reference.

Tagging tools

For enforcement of volume and snapshot tagging we use Graffiti Monkey. This looks at instances and propagates the instance tags to all associated EBS volumes. It also propagates EBS volume tags to snapshots.
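The propagation idea can be sketched in a few lines of Python. This is only an illustration over plain dictionaries, not Graffiti Monkey's code: the resource IDs, tag names and the rule that a parent's tags overwrite the child's are all assumptions.

```python
from typing import Dict

Tags = Dict[str, str]


def propagate(parent_tags: Tags, child_tags: Tags) -> Tags:
    """Return the child's tags with the parent's tags layered on top (assumed rule)."""
    merged = dict(child_tags)
    merged.update(parent_tags)
    return merged


def propagate_all(instances, volumes, snapshots):
    """Push instance tags down to volumes, then volume tags down to snapshots."""
    for instance in instances.values():
        for vol_id in instance["volumes"]:
            volumes[vol_id]["tags"] = propagate(instance["tags"], volumes[vol_id]["tags"])
    for volume in volumes.values():
        for snap_id in volume["snapshots"]:
            snapshots[snap_id]["tags"] = propagate(volume["tags"], snapshots[snap_id]["tags"])


# Hypothetical inventory: one tagged instance, one untagged volume and snapshot.
instances = {"i-aaa": {"tags": {"Project": "news", "Env": "dev"}, "volumes": ["vol-1"]}}
volumes = {"vol-1": {"tags": {}, "snapshots": ["snap-1"]}}
snapshots = {"snap-1": {"tags": {"Backup": "nightly"}}}

propagate_all(instances, volumes, snapshots)
print(snapshots["snap-1"]["tags"])
```

The order matters: volumes are tagged first so that snapshots inherit the instance tags through their volume.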

I have heard good things about the tagging tool auto-tag from GorillaStack - this uses Lambda and CloudTrail to automatically tag resources with the creator’s IAM account name.
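To give a feel for the approach, here is a rough Python sketch of pulling the creator out of a CloudTrail `RunInstances` record. It is not auto-tag's code: the `Creator` tag key, the function name and the trimmed sample record are made up for illustration, and a real Lambda would go on to apply the tags through the EC2 API.

```python
def creator_tags(record):
    """Map each launched instance ID to a tag naming who launched it."""
    if record.get("eventName") != "RunInstances":
        return {}
    identity = record.get("userIdentity", {})
    # IAM users carry a userName; fall back to the ARN for roles and others.
    creator = identity.get("userName") or identity.get("arn", "unknown")
    response = record.get("responseElements") or {}
    items = response.get("instancesSet", {}).get("items", [])
    return {item["instanceId"]: {"Creator": creator} for item in items}


# Heavily trimmed, hypothetical CloudTrail record.
sample_record = {
    "eventName": "RunInstances",
    "userIdentity": {
        "type": "IAMUser",
        "userName": "alice",
        "arn": "arn:aws:iam::123456789012:user/alice",
    },
    "responseElements": {"instancesSet": {"items": [{"instanceId": "i-0abc1234"}]}},
}

tags_to_apply = creator_tags(sample_record)
print(tags_to_apply)
```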

Resource cycling tools

Fairfax have open-sourced two tools in this area, Cloudcycler and Flywheel. I’ve previously written a post on how we use these tools.

Other resource cycling tools that I am aware of but have not used:

ParkMyCloud

GorillaStack

Skeddly

There are also a heap of EC2 cycling projects on Github but IMO Cloudcycler is the best one. You can also use native autoscaling scheduled actions.
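For the native option, a pair of scheduled actions on an autoscaling group could look something like this. A minimal sketch with boto3: the group name, action names and office hours are placeholders, and the recurrence is a cron expression that AWS evaluates in UTC by default, so the hours would need shifting for local time.

```python
def office_hours_actions(group_name, start_hour=8, stop_hour=17, size=1):
    """Build parameters for a weekday scale-up action and a weekday scale-to-zero action."""
    return [
        {
            "AutoScalingGroupName": group_name,
            "ScheduledActionName": "office-hours-start",  # hypothetical name
            "Recurrence": f"0 {start_hour} * * 1-5",
            "MinSize": size,
            "MaxSize": size,
            "DesiredCapacity": size,
        },
        {
            "AutoScalingGroupName": group_name,
            "ScheduledActionName": "office-hours-stop",  # hypothetical name
            "Recurrence": f"0 {stop_hour} * * 1-5",
            "MinSize": 0,
            "MaxSize": 0,
            "DesiredCapacity": 0,
        },
    ]


def apply_actions(actions, region="ap-southeast-2"):
    """Register the actions with AWS (needs boto3 and credentials)."""
    import boto3

    client = boto3.client("autoscaling", region_name=region)
    for action in actions:
        client.put_scheduled_update_group_action(**action)


actions = office_hours_actions("dev-web-asg")  # placeholder group name
# apply_actions(actions)  # uncomment to create the schedule for real
```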

Cost analysis tools

Evan Crawford (billing guru at AWS) has some templates to help import your DBR files to Redshift for further analysis and to create dashboards. Take a look at his other repos while you are there.

Another AWS staffer, Javier Ros, has a reserved instance balancer tool. This looks at your current RI purchases and instance usage and recommends splits, joins or AZ moves so usage matches purchases more closely. It can optionally automatically apply the recommendations. This is a rather finicky tool to get running and is a tad buggy, particularly if you have a lot of instances and reservations (and hence a large DBR file).

Other tools that I use:

AWS supply Trusted Advisor, but you need a business or enterprise support contract to get the most useful cost checks and recommendations.

If you have an enterprise AWS support contract your TAM should be supplying you with RI recommendation reports, billing reports etc, reviewing them with you regularly, and providing other ways to save costs.

I use CloudCheckr to cross check the Trusted Advisor RI recommendations and for RDS, Elasticache and Redshift RI recommendations (which TA does not supply). This is a commercial SaaS solution.

I have previously used Cloudability and they do have a better looking product (the dashboards are particularly pretty) but the cost and security bundle in CloudCheckr currently fits our needs better.

If you know of any other useful cost control or analysis tools please let me know using the contact details in the sidebar.

Tue, Mar 1, 2016

A quick note to say I’m speaking at the AWS Sydney meetup in April with a talk on cost control. I hope to see you there, come say hello. I’ll also put a link to the slides up here too.

Here’s the latest selection of tech articles that caught my eye - there’s a security and serverless focus this time.

Industry Best Practices for Securing AWS Resources - essential reading if you are responsible for AWS security, or even just to properly secure your own personal account.

How to Use AWS WAF to Block IP Addresses That Generate Bad Requests - uses Lambda, Cloudfront logs and CloudWatch. It will be interesting to see what throughput the Lambda function can get in parsing Cloudfront logs - the 5 minute Lambda runtime may not be long enough for larger site logs.

From Monolith to Microservices — Part 1: AWS Lambda and API Gateway - a great primer on serverless architecture.

Getting Started with AWS Lambda (Node JS) - Tom’s follow up article on NodeJS and Lambda.

Raspberry Pi 3 on sale now at US$35 - built in wifi and Bluetooth making this an all in one IoT device.

Control your Keurig B60 through your Amazon Echo, using the Alexa Skills Kit and AWS IOT service - some hardware hacking required.

AWS Latency - check latency to AWS services in various regions from your browser.

Package, reuse and share particles for CloudFormation projects

Service Oriented Architecture with AWS Lambda: A Step-by-Step Tutorial

Claudia - ‘Claudia helps you deploy Node.js microservices to Amazon Web Services easily.’

A Billion Taxi Rides in Redshift - analysing NYC trip data.

Photo credit: www.perspecsys.com

Thu, Feb 18, 2016

Lots of Serverless news this time - it really has momentum

Building a serverless anagram solver - nice, would have been good to see a cost comparison.

EFS benchmarking - rumour has it EFS is delayed because of performance issues with small files and these benchmarks from the preview service look to back that up.

Monitoring the performance of BBC iPlayer using Lambda, Kinesis and Cloudwatch

I’m not a fan of David Guetta but this serverless recording campaign architecture is very clever.

A serverless REST API in minutes

The Guts of a Serverless Service - part three of an excellent ongoing series.

Meshbird:

“Meshbird create distributed private networking between servers, containers, virtual machines and any computers in different datacenters, different countries, different cloud providers. All traffic transmit directly to recepient peer without passing any gateways. Meshbird do not require any centralized servers. Meshbird is absolutly decentralized distributed private networking.”

Here’s a horrible idea. Link up the Simple Drone Service to Facebook’s database of faces (to which you handily contributed by tagging your friends in photos) and bang, automatic mobile person finder.

When Agile is the wrong choice for your organisation. Some push back on the ‘Agile for all’ idea.

I use Lucid Charts but Cloudcraft looks like a great alternative and is 3D. Having pricing info available in the design diagram is great.

AWS Pricing API

Resco is a SPA matching reservations to running instances.

Picking the right data store: one of many great articles coming through the AWS Startup Medium blog.

Thu, Feb 4, 2016

Serious business.

At yesterday’s AWS Meetup in Sydney I presented on ‘Growing Up with AWS - 5 ways to ease the pain’ - a short talk about what we have learnt when scaling from nothing to a multi-million dollar AWS deployment. Here are the slides.

If you click through to the presentation on SlideShare you can see the speaker notes which will help. I’m not generally one for wordy slides.

Thanks once again to Polar Seven for organising and supporting the meetup.

Tue, Jan 12, 2016

Image credit: Southern Illinois University Student Center (http://www.siustudentcenter.org) [Public domain], via Wikimedia Commons

A bumper selection this week as I’m still catching up from the Christmas break.

How to build a successful AWS consulting practice - something that’s on my mind for this year.

AWS Shell is a fantastic autocomplete AWS CLI shell.

Postgres 9.5 feature set - includes upsert

A Lambda function to check SSL certificate expiry. Maybe checking the IAM certificate store would be better for AWS hosted solutions. I see this is also in the new set of Trusted Advisor checks.

Receiving IoT messages in your browser - uses the realtime.co messaging platform.

I am surprised that t2.nano instances support EBS encryption. Perhaps this will be a default feature from now on?

Custom CloudFormation resources for API Gateway

Pyutu - a Python library for the AWS Pricing API

AWS VPC NAT Gateways depend on EC2 Enhanced Networking

How to Automatically Update Your Security Groups for Amazon CloudFront and AWS WAF by Using AWS Lambda

Thu, Jan 7, 2016

HOWTO: Launch AWS Marketplace AMIs as Spot instances

This post assumes you know about AWS Spot instances and the AWS Marketplace - in short, Spot instances allow you to bid on spare AWS EC2 instance capacity for (usually) a much lower price, and the Marketplace allows you to create instances based on software images supplied by third parties. For example a network software vendor may supply their firewall software as a Marketplace image (known as an AMI - an Amazon Machine Image) and from that AMI you can create a Spot EC2 instance (a server) for a greatly reduced cost compared to the normal EC2 prices.

A colleague planned to spin up an instance based on a Marketplace AMI for testing and asked me if I had any advice. ‘Launch it as spot?’ I suggested and he tried but reported back that he couldn’t as the Spot option was not selectable in the AWS console. My first thought was ‘oh he must be choosing an instance type that doesn’t support Spot’ - not all instance types support being run as Spot - but he was using a c3.xlarge which is on the list of supported instance types. I tried it myself with a different Marketplace AMI and sure enough the Spot check box was inactive.

After some research I found there is a way to launch these AMIs as Spot instances but it is not so obvious so I have detailed it here.

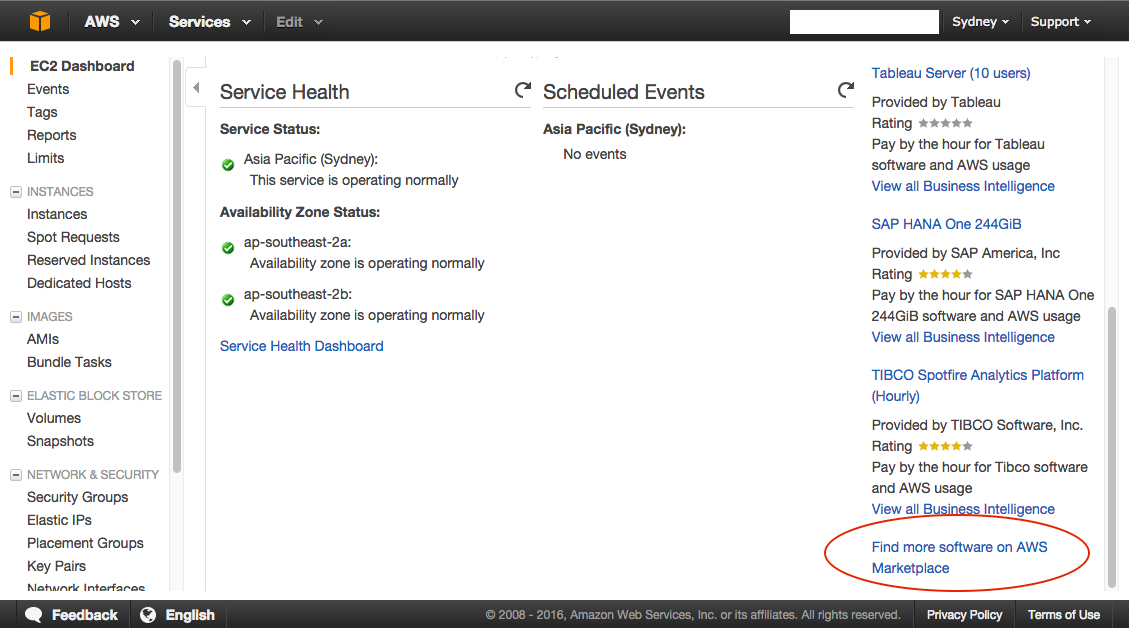

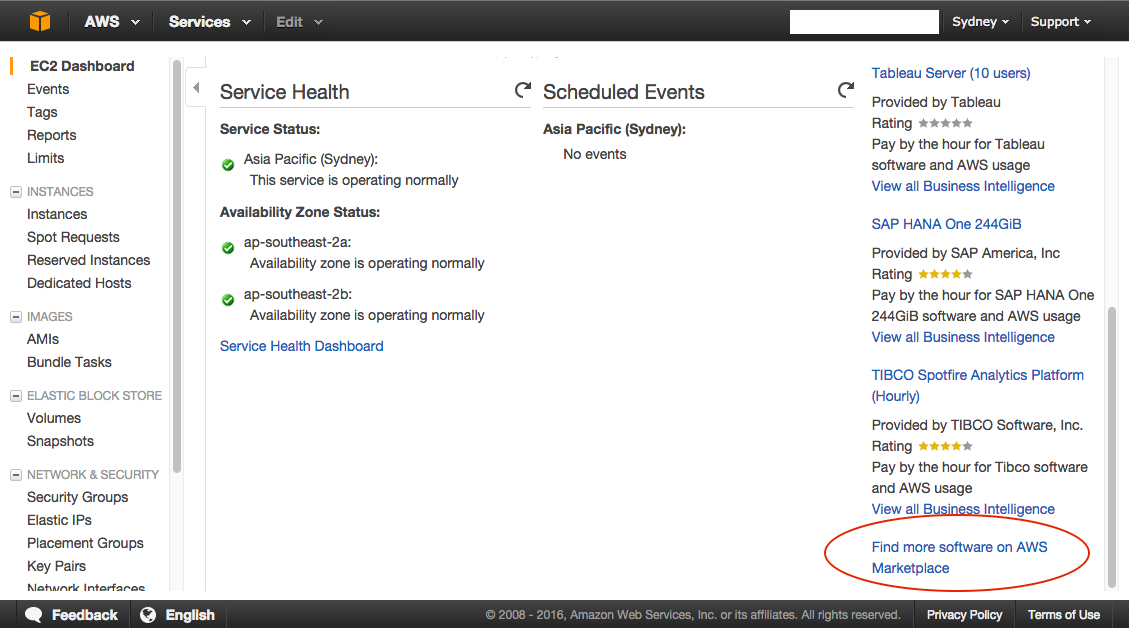

1. Sign into your AWS console and navigate to the EC2 section.

2. Select the link to the Marketplace on the bottom right hand side.

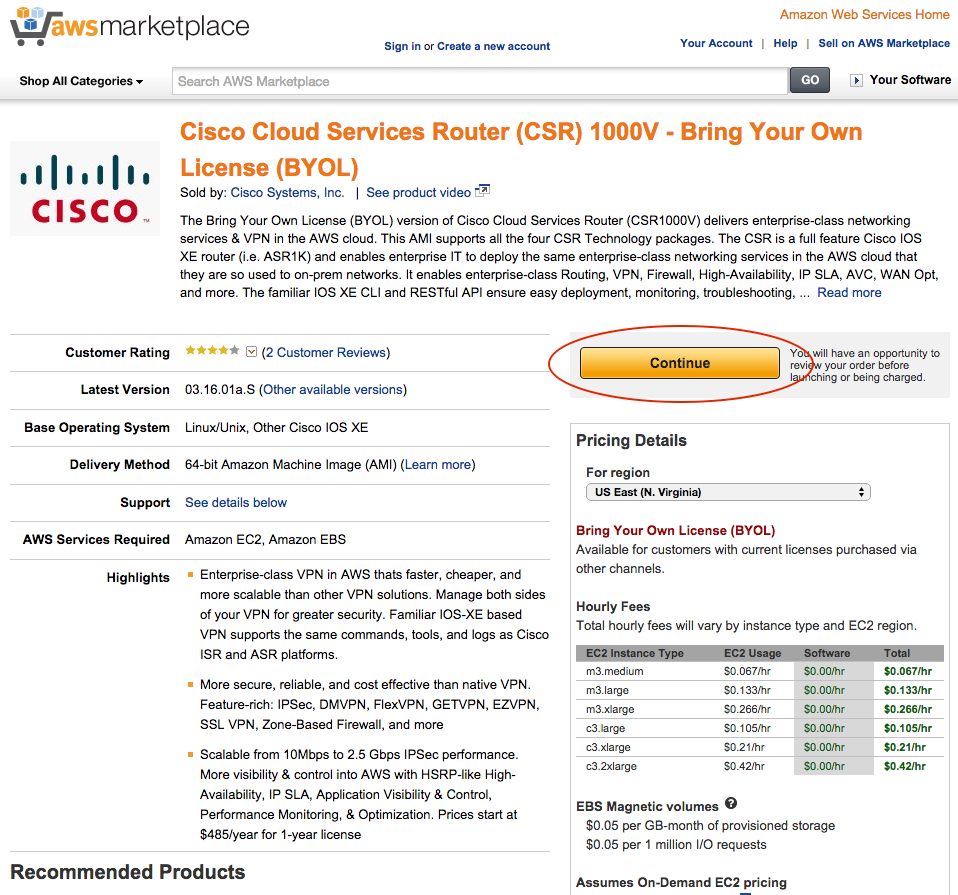

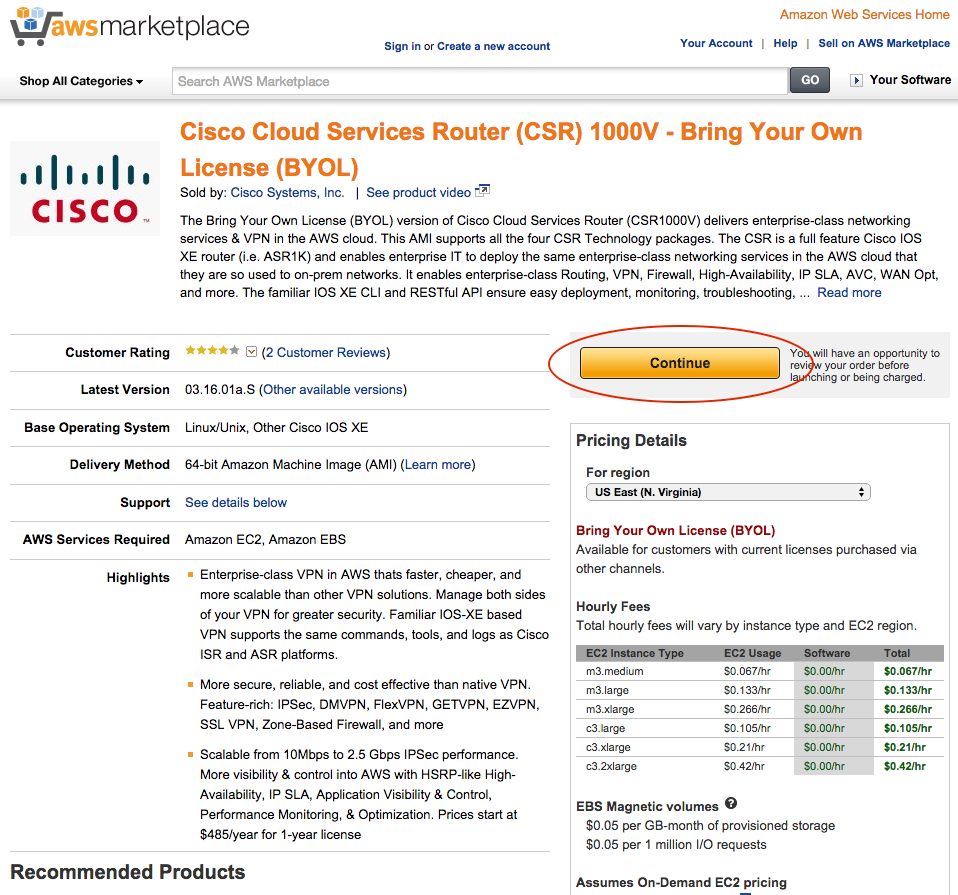

3. Find the Marketplace AMI which you want to use. For this example I am using the AMI “Cisco Cloud Services Router (CSR) 1000V - Bring Your Own License (BYOL)”. Some AMIs include a fee for running the software on top of the fee for running the EC2 instance, others are Bring Your Own License (BYOL) meaning you have to own or purchase a license separately. Select your region, review the costs and click Continue.


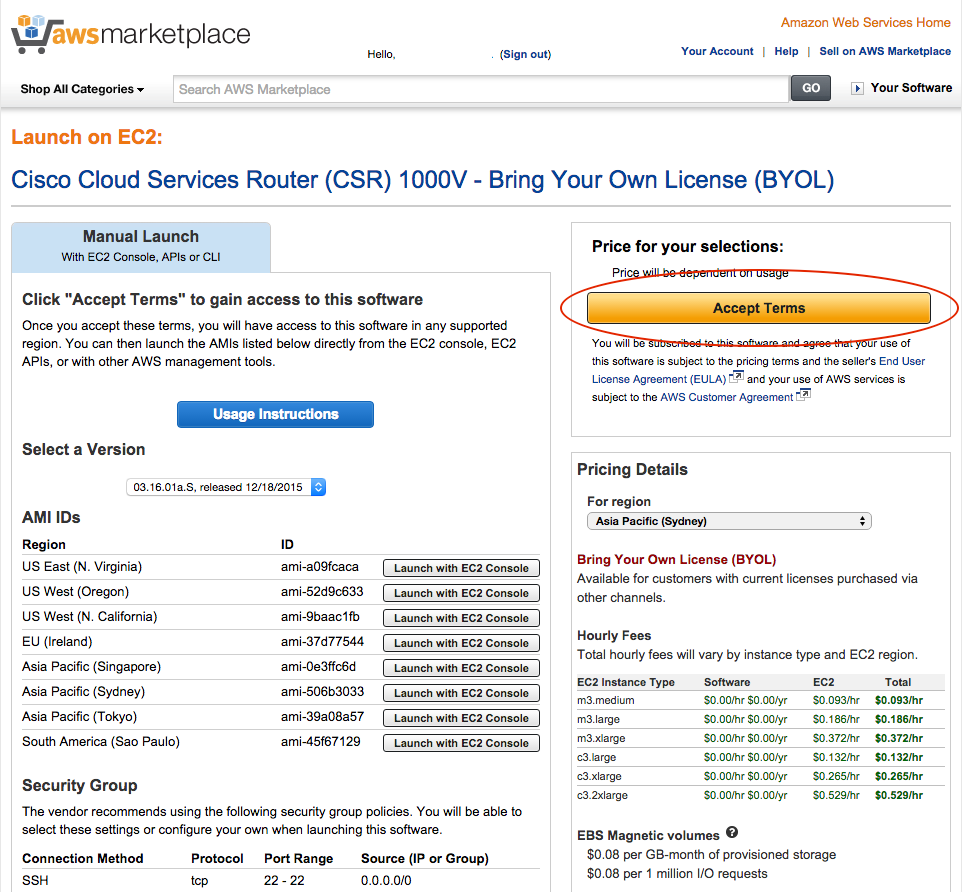

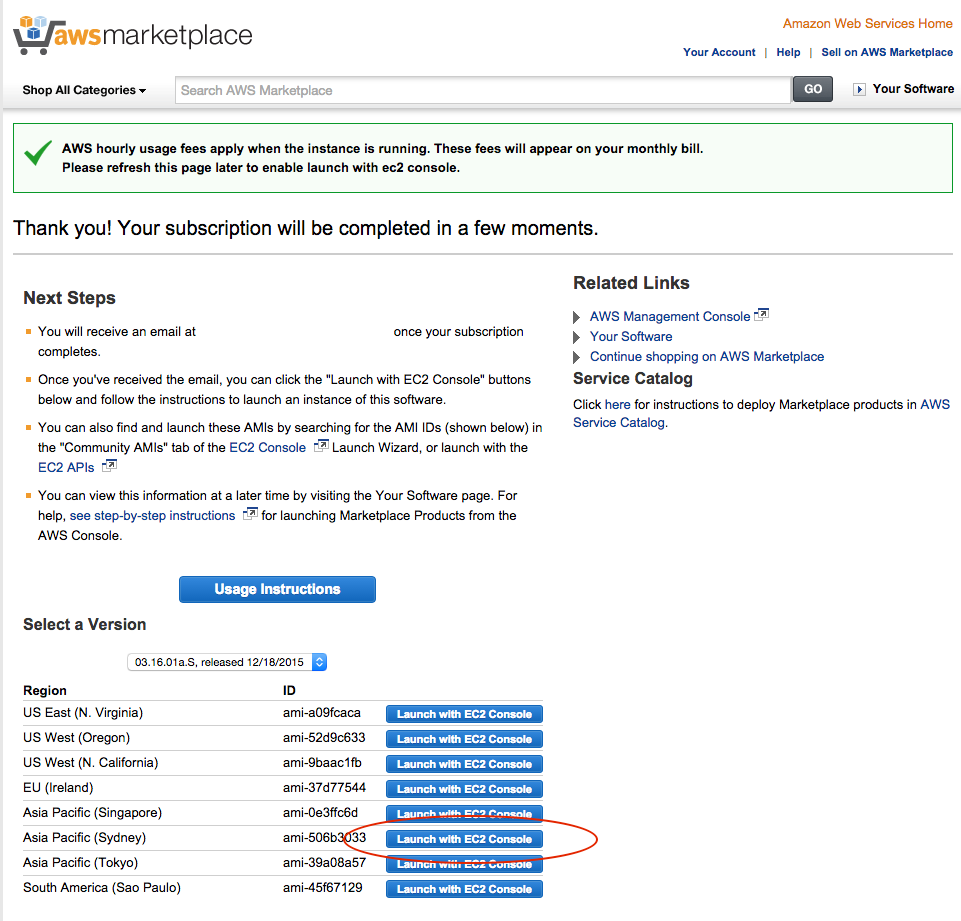

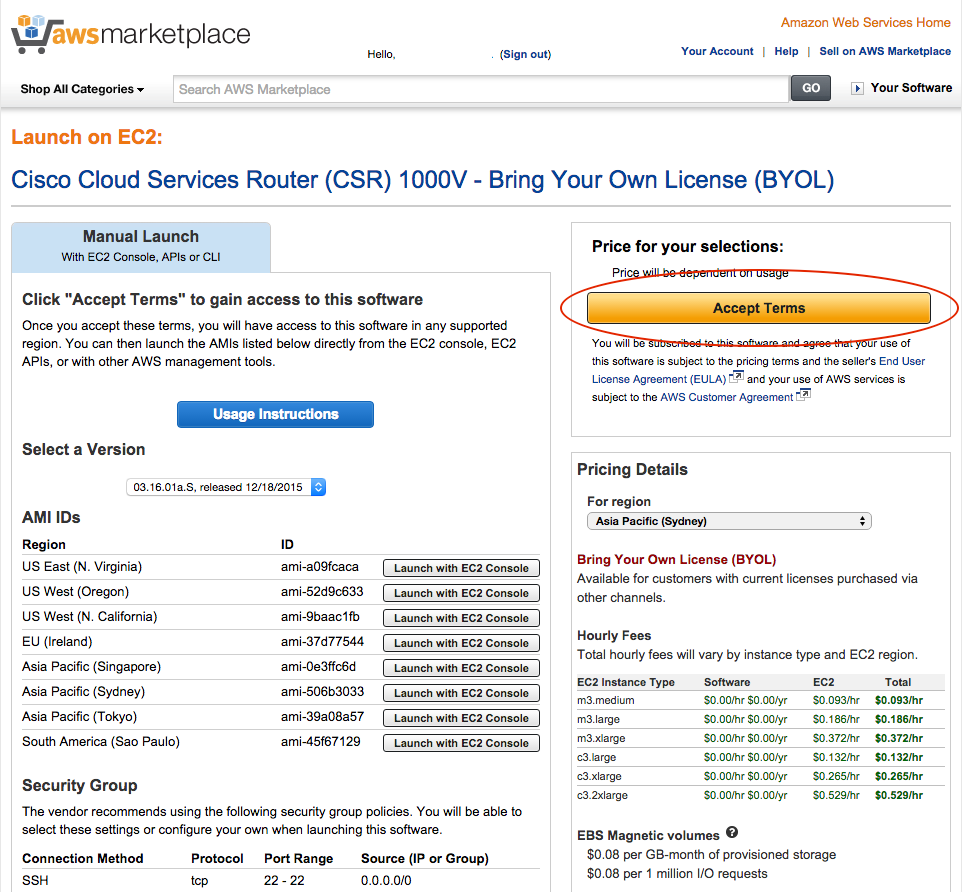

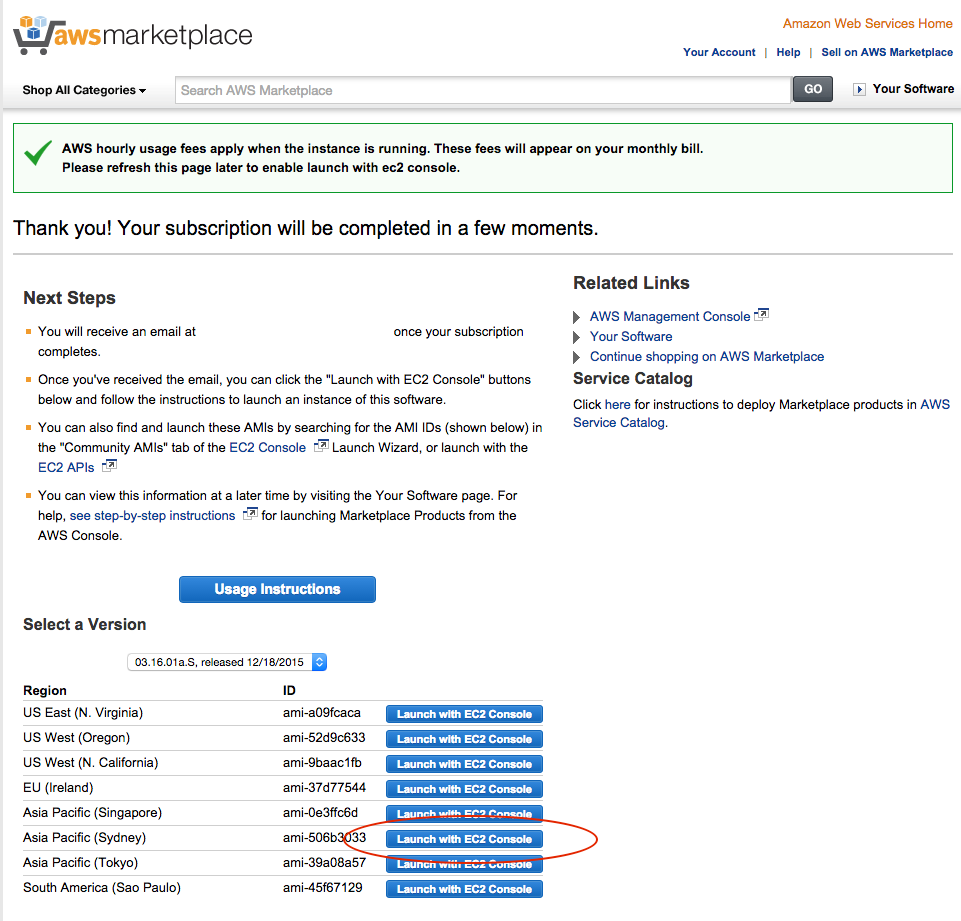

4. You have to ‘subscribe’ to access the AMI. This means accepting the terms and conditions for running the vendor software (and means that AWS can notify you if there is an issue with the AMI software, such as a security update). You can see that the ‘Launch with EC2 console’ links are inactive meaning you must accept the terms to continue. Click the ‘Accept Terms’ button.


5. You will be told to refresh the page. Do that. The ‘Launch with EC2 console’ links will now be active. Click the link next to the region in which you want to launch the instance - I chose the Sydney region.


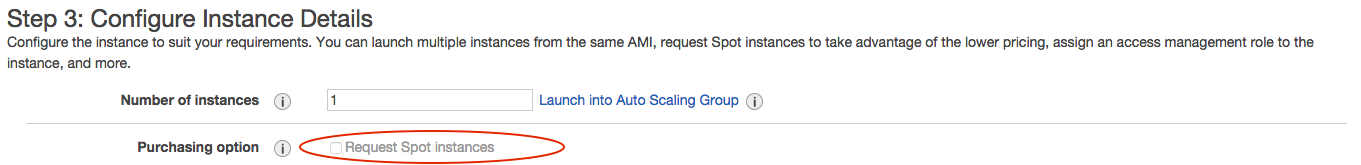

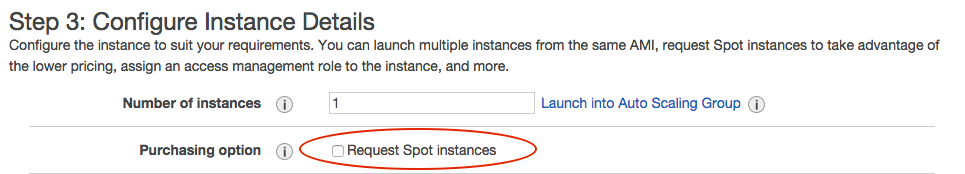

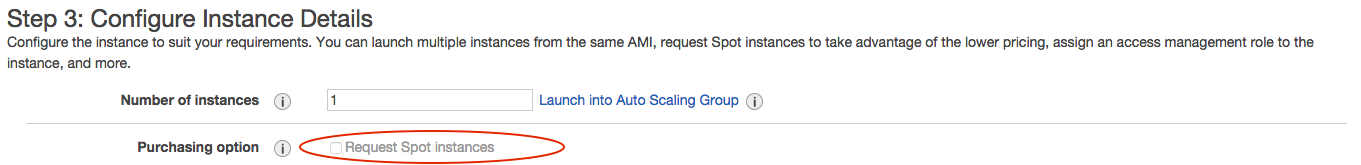

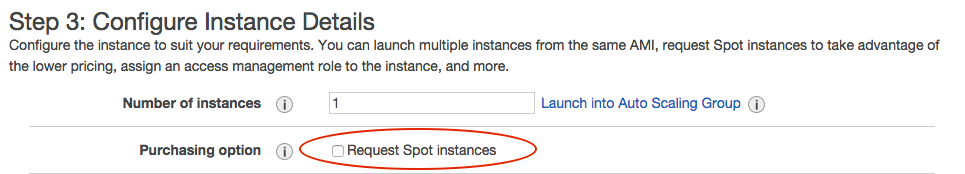

6. You can now continue with the normal EC2 launch wizard and this time the ‘Request Spot instances’ check box is available.


And that’s it. CLI users, you have to subscribe to the AMI in the console - there does not appear to be a way to subscribe from the CLI. Once you have subscribed you can launch via the CLI as normal.
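For anyone scripting the launch after subscribing, the request could look roughly like this. A minimal sketch using boto3 rather than the CLI: the AMI ID, bid price and helper names are placeholders made up for illustration, while c3.xlarge and the Sydney region come from the walkthrough above.

```python
def build_spot_request(ami_id, instance_type, max_price, key_name=None):
    """Build the parameters for a one-off Spot request for a single instance."""
    launch_spec = {"ImageId": ami_id, "InstanceType": instance_type}
    if key_name:
        launch_spec["KeyName"] = key_name
    return {
        "SpotPrice": str(max_price),  # maximum bid in USD per hour, as a string
        "InstanceCount": 1,
        "Type": "one-time",
        "LaunchSpecification": launch_spec,
    }


def request_spot(params, region="ap-southeast-2"):
    """Submit the request to EC2 (needs boto3, credentials and an active subscription)."""
    import boto3

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.request_spot_instances(**params)


# "ami-xxxxxxxx" stands in for the Marketplace AMI ID shown on the launch page.
params = build_spot_request("ami-xxxxxxxx", "c3.xlarge", "0.10")
# request_spot(params)  # uncomment to place the bid for real
```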

Mon, Jan 4, 2016

Round-up for 4th January 2016

Happy New Year!

Hot cross buns on display at my local supermarket - January 3rd. Relentless.


Here’s my latest collection of interesting articles and links. Not all are new - just new to me (and maybe you).

The 12 Days of Lambda - a collection of nice ideas for Lambda functions - however I strongly recommend having a break over the holidays and NOT spending the time doing IT at home. Go for a walk, visit a museum, dig your yard… get away from the screens.

AWS Serverless multi-tier architectures - a good primer on combining API Gateway, Lambda and various AWS database options to produce a ‘traditional’ multi tier solution without servers.

The Serverless start-up - an excellent article on (again..) using Lambda, API Gateway and DynamoDB to build a cheap but flexible and scalable site.

Integrating SignalFX, API Gateway, Lambda and OpsGenie - a great walkthrough, particularly of the concepts and setup of API Gateway.

Certificate Revocation Checking - just before Christmas, Google deprecated a Symantec root certificate in Chrome, saying it had been revoked. This page allows you to check the revocation status of the certificate (and chain) for any site.

rclone is a command line tool for copying and syncing files between S3, Google Drive, Dropbox etc.

Tracking baby data with AWS IoT - cool hack, Arduino is something else I wish I had the time for.

CloudSploit convert from EC2 to Lambda & API Gateway

Why we chose Kubernetes over ECS - at Fairfax we went through a similar process with the same result.

Wed, Dec 16, 2015

Christmas comes but once a year

Now it’s here, now it’s here

Bringing lots of joy and cheer

Tra la la la la

You and me and he and she

And we are glad because

because because because

There is a Santa Claus

Christmas comes but once a year

Now it’s here, now it’s here

Bringing lots of joy and cheer

Tra la la la la

Unlike Christmas, the AWS bill comes every month and does not generally bring joy and cheer. In this post I want to share details on two tools that we use at Fairfax Media to reduce our AWS costs all year round, and a simple action you can take to reduce your bill even more over the holiday period.

Like so many other companies, we have been through the AWS boom times - ease of use leading to rapid expansion of cloud resources and the resultant billing - and the bust - when those bills start becoming so significant they attract attention from the finance department. To help reduce our costs we have developed a couple of tools and both have been open sourced on our GitHub page.

The tools were both written by the very smart David Baggerman and are called ‘Cloudcycler’ and ‘Flywheel’.

Cloudcycler allows you to shut down and start up AWS resources on a scheduled basis. There are quite a lot of tools around that do this but none met our needs. In particular Cloudcycler allows us to:

- shut down EC2 instances

- shut down RDS instances

- shut down entire CloudFormation stacks (and all their resources)

- snapshot RDS volumes on shutdown

- start up resources, including from snapshots (e.g. restoring RDS data)

- start up resources from CloudFormation templates

We use Cloudcycler to turn off the majority of our non-production environments outside of office hours. The default configuration is to only run these environments from 08:00 to 17:00 weekdays - with some exceptions for environments that are used by offshore development teams. This produces significant savings - in a 30 day month there are 720 hours in total. Of those 30 days, around 22 are working days so we now run these environments for 22 (days) * 9 (hours) = 198 hours - a saving of 522 hours - or a cost of just 28% of the full monthly running cost.
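The arithmetic is easy to rerun for your own schedule. A small Python sketch using the figures from the paragraph above; the variable and function names are just for illustration.

```python
HOURS_PER_DAY = 24


def running_hours(working_days: int, hours_per_day: int) -> int:
    """Hours an environment runs when it is only on during office hours."""
    return working_days * hours_per_day


days_in_month = 30
total_hours = days_in_month * HOURS_PER_DAY      # 720 hours if left on all month
scheduled_hours = running_hours(22, 9)           # 22 working days, 08:00 to 17:00
saved_hours = total_hours - scheduled_hours
cost_fraction = scheduled_hours / total_hours

print(f"{scheduled_hours} of {total_hours} hours, {cost_fraction:.1%} of full cost")
```

That works out at 27.5% of the full running hours, which is the 28% quoted above after rounding.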

Flywheel takes this a step further. Turning on non-production resources on a scheduled basis is better than having them running full time, but even better would be to not have them running at all until they are needed. Flywheel does this by being an EC2 ‘proxy’. Instances controlled by Flywheel are by default shut down. Flywheel provides a simple web site with one-click startup for those instances, so the end user, such as a developer or tester, can easily start up the instance on demand. After a (configurable) time period the instance is automatically shut down again. A particular use case is for test instances which are only required to be on for an hour or so during the day.

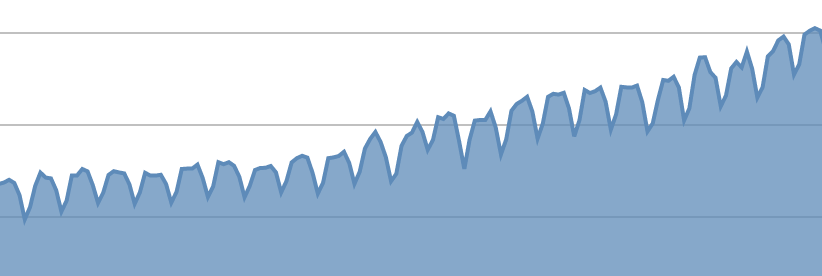

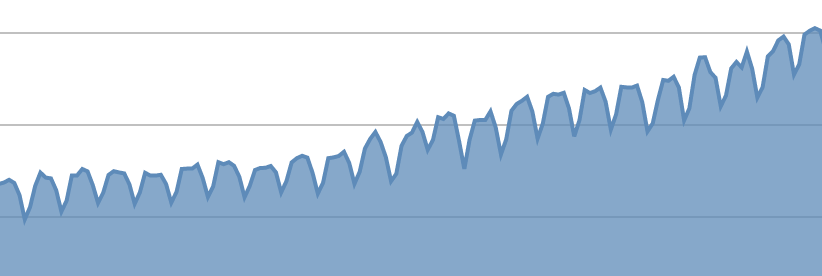

Using both of these tools has significantly reduced our AWS running hours and therefore our costs. This graph, taken from CloudCheckr (our cost & security tool of choice) shows the impact that Cloudcycler has on our running instance hours over time.

Yes, it is an overall upward trend as we add more projects to AWS but you can clearly see the effect of turning off resources (particularly clear are the dips at weekends).


Onto the special December/January cost saving tip.

Fairfax Media encourages staff to take leave around Christmas. There are a few reasons for this - it is partly a relic of the old print days when presses were run with a skeleton staff over Christmas, partly a concern for staff welfare, and no doubt partly to reduce the financial liability from some staff having accrued high amounts of leave. Whatever the reason, we can use this to our advantage in saving AWS costs. We also, like many large companies, have a change freeze in place over the holidays.

Adding all these together, we spoke to our development teams to let them know that there would be a new Cloudcycler schedule in place from Monday 21st December to Friday 8th January 2016 inclusive. During that time, environments will be turned off completely, all day. This is an opt-out system - by default all systems are in scope and exceptions can be added on demand, for the small number of projects which will be active over this time.

This has the potential to save 15 (days) * 9 (hours) = 135 hours of running costs over the Christmas period - a good saving gained just by sending a few emails and changing a configuration file.
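The 15 day figure is simply the number of weekdays in the shutdown window. A small sketch, using only the dates given above (the helper name is illustrative):

```python
from datetime import date, timedelta


def weekdays_between(start: date, end: date) -> int:
    """Count Monday-to-Friday days from start to end, both inclusive."""
    total_days = (end - start).days + 1
    return sum(
        1
        for offset in range(total_days)
        if (start + timedelta(days=offset)).weekday() < 5  # 0-4 are Mon-Fri
    )


# Shutdown window from the post: Monday 21st December to Friday 8th January.
shutdown_days = weekdays_between(date(2015, 12, 21), date(2016, 1, 8))
hours_saved = shutdown_days * 9  # 9 scheduled hours per weekday (08:00 to 17:00)

print(shutdown_days, hours_saved)
```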

Why not contact your development teams and see if you can do the same?

P.S. excluding Christmas there are seven weekday public holidays in New South Wales, Australia in 2016 - I would welcome a pull request to the Cloudcycler repo with changes to make it read those dates (from a static file and/or a web service) and extend the scheduler so those days are treated as weekend days.
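To make that request a little more concrete, here is a rough Python sketch of the idea. It is not Cloudcycler's code and does not use its configuration format; `load_holidays`, `should_run` and the one-date-per-line file layout are all hypothetical.

```python
from datetime import date, datetime


def load_holidays(lines):
    """Parse ISO dates (YYYY-MM-DD), one per line; blanks and # comments are ignored."""
    holidays = set()
    for line in lines:
        text = line.split("#", 1)[0].strip()
        if text:
            holidays.add(date.fromisoformat(text))
    return holidays


def should_run(now: datetime, holidays, start_hour: int = 8, stop_hour: int = 17) -> bool:
    """True if an environment should be up: a non-holiday weekday within office hours."""
    if now.weekday() >= 5:          # Saturday or Sunday
        return False
    if now.date() in holidays:      # public holidays are treated like weekend days
        return False
    return start_hour <= now.hour < stop_hour


# Two example dates; a real list would come from a static file or a web service.
holidays = load_holidays(["2016-01-26  # Australia Day", "", "2016-04-25  # Anzac Day"])
print(should_run(datetime(2016, 1, 26, 10, 0), holidays))
```

Keeping the holiday list as plain data means the schedule logic does not need to change from year to year.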

Mon, Dec 7, 2015

Round-up 7th December 2015

Yellow Lab Tools is a great web page speed tester with suggestions for improvement. Here’s the result for this site. 91 is great but I’m not sure why I’m getting penalised for lack of caching; it is set up in CloudFront so I need to check further. But hey, an A is an A :)

The Oncoming Train of Enterprise Container Deployments - an excellent article that echoes a lot of my concerns around Docker. I see Docker as enabling, improving and enforcing development and release practices but not solving your existing problems as if by magic. If you aren’t doing green/blue deployments now, if you are not using CloudFormation to build replaceable infrastructure, why will deploying Docker suddenly improve your processes?

Free access to PluralSight courses via Microsoft - some excellent content in there.

Browser based visualisation of Docker layers

S3Stat - log analysis for S3 and CloudFront logs - I’m trialling this for this blog and will write up a review over Christmas.

PNG to ICO - I noticed (via S3Stat) that I had some 404 errors for favicon.ico - I only had favico.png. This did the conversion easily and for free.

Some of these are from the Devops weekly newsletter to which I recommend you subscribe.

Thu, Nov 26, 2015

This is a follow-up to my previous post on Let’s Encrypt and CloudFront.

I replaced the cert yesterday with one covering both www and the root domain. I just re-ran the command adding the second domain name:

./letsencrypt-auto certonly -a manual -d www.paulwakeford.info -d paulwakeford.info --server https://acme-v01.api.letsencrypt.org/directory --agree-dev-preview --debug

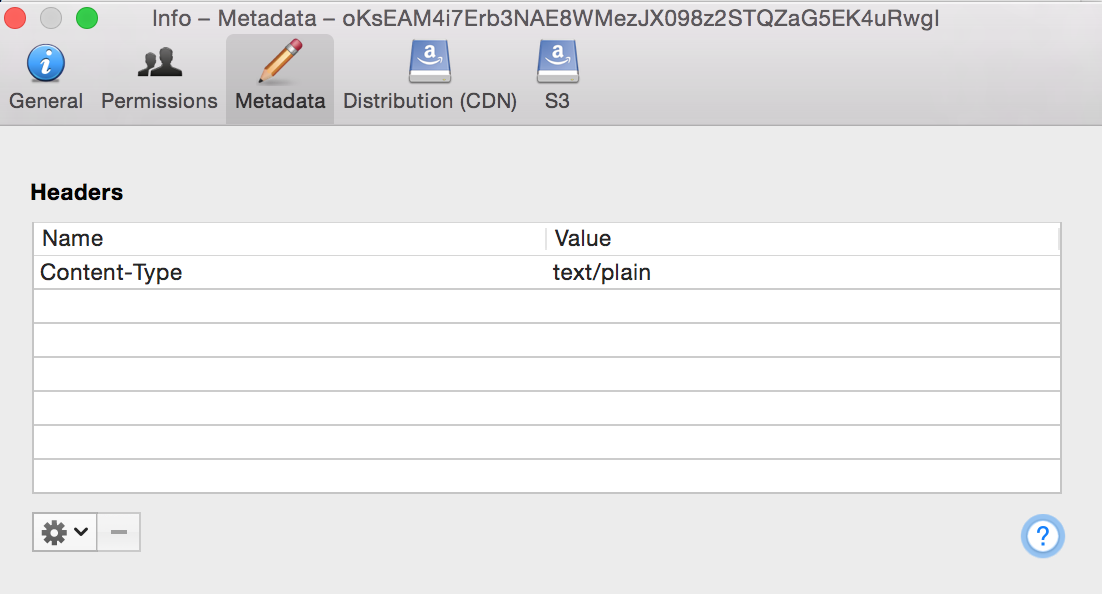

I had to turn off the CloudFront http to https redirector to get the validation done for paulwakeford.info, otherwise it was almost the same from step 9 onwards. This time I created the validation file as .txt, added the required content, then renamed the file — that way the content type was already set to text/plain. I also didn’t get the error message at step 12.

Wed, Nov 25, 2015

Interesting links for 25/11/2015

Fallout 4 pipboy relay

The Design Stamina Hypothesis. I’ve seen so many projects be delayed or fail due to lack of initial design or design rigour.

Reconsider. Not everyone has to be or wants to be a bearded hipster with a startup.

How to build an API in 10 minutes - uses API Gateway and Lambda.

Consigliere is a tool that makes AWS Trusted Advisor multi-account. Info is a little sparse right now, author looks to be adding support for sqlite DBs (currently redis only). Marked to come back to in a few weeks.

A reserved instances czar. I can attest to AWS cost control being close to a full time job. I only get time to update reservations quarterly which means we do lose out on some savings.

Mocking boto3 calls

Serverless apps with AWS Lambda and Algorithmia

Tue, Nov 24, 2015

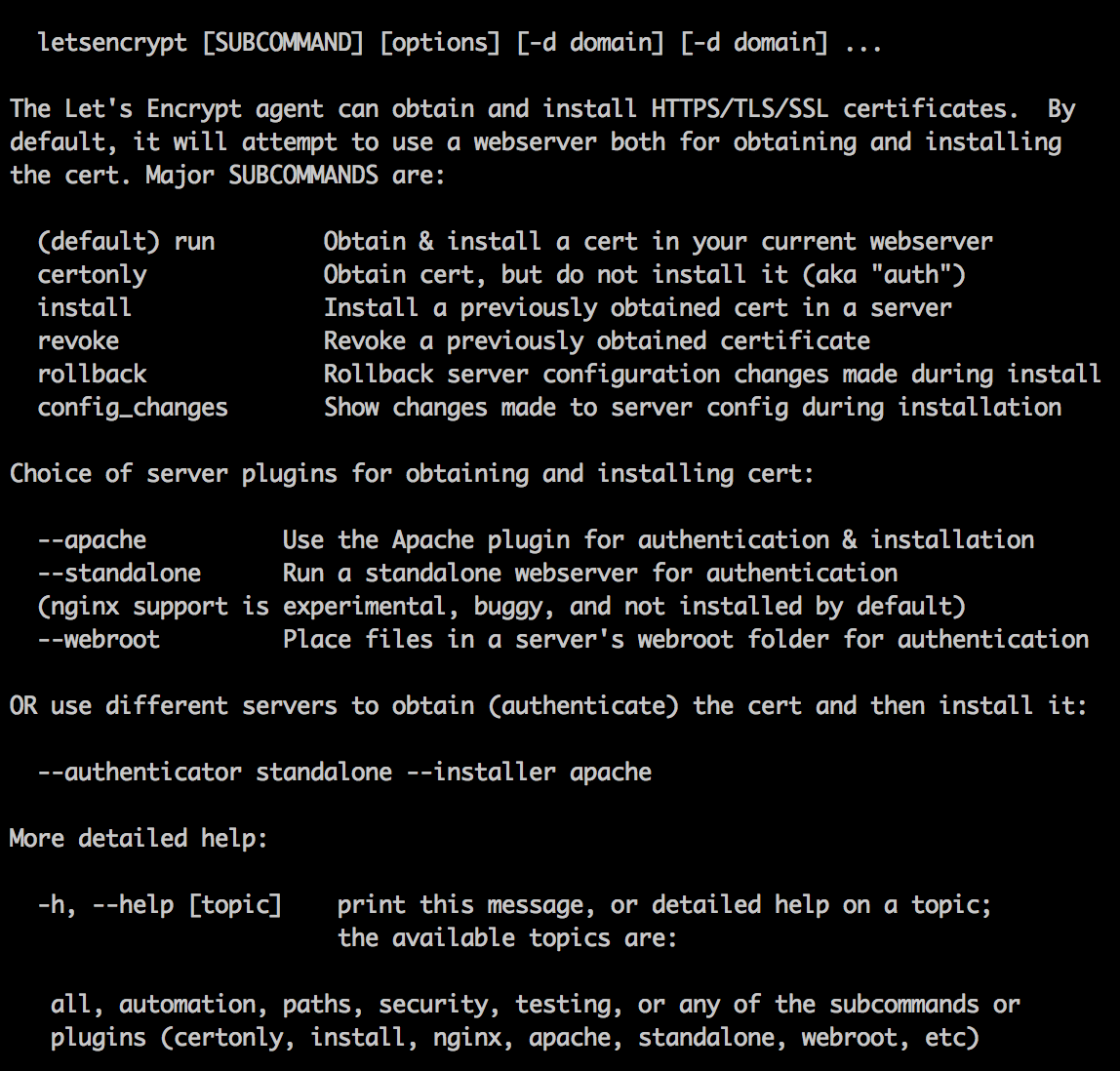

Last weekend I registered this domain, set it all up in AWS (S3 & CloudFront - I’ll post about that some other time) and registered for the letsencrypt.org closed beta (the open beta starts in December). Let’s Encrypt is an initiative to provide free SSL certificates, for anyone, anywhere. You can read up on the reasons and how it is done on their About page. Today I received an email saying I was accepted. Here’s how I set this up. My specific use case is for this blog which is hosted as a static web site in AWS S3 via CloudFront but the usage of the Let’s Encrypt tool will be much the same for other server software.

- You will be using sudo a lot - letsencrypt sets very restrictive permissions on the directories where your cert files will be stored. That’s a good thing, but sudo’ing all the time is a pain so I ran sudo bash temporarily.

Install:

git clone https://github.com/letsencrypt/letsencrypt

cd letsencrypt

./letsencrypt-auto --server https://acme-v01.api.letsencrypt.org/directory --help

grep: /etc/os-release: No such file or directory

WARNING: Mac OS X support is very experimental at present...

if you would like to work on improving it, please ensure you have backups

and then run this script again with the --debug flag!

Well, OK then. What’s the worst that could happen?

./letsencrypt-auto --server https://acme-v01.api.letsencrypt.org/directory --help --debug

letsencrypt now installs a bunch of stuff via homebrew (which I had installed already): libxml2, augeas, dialog and virtualenv. If you didn’t sudo before you will get an error at the point of installing virtualenv: IOError: [Errno 13] Permission denied: '/Library/Python/2.7/site-packages/virtualenv.py'

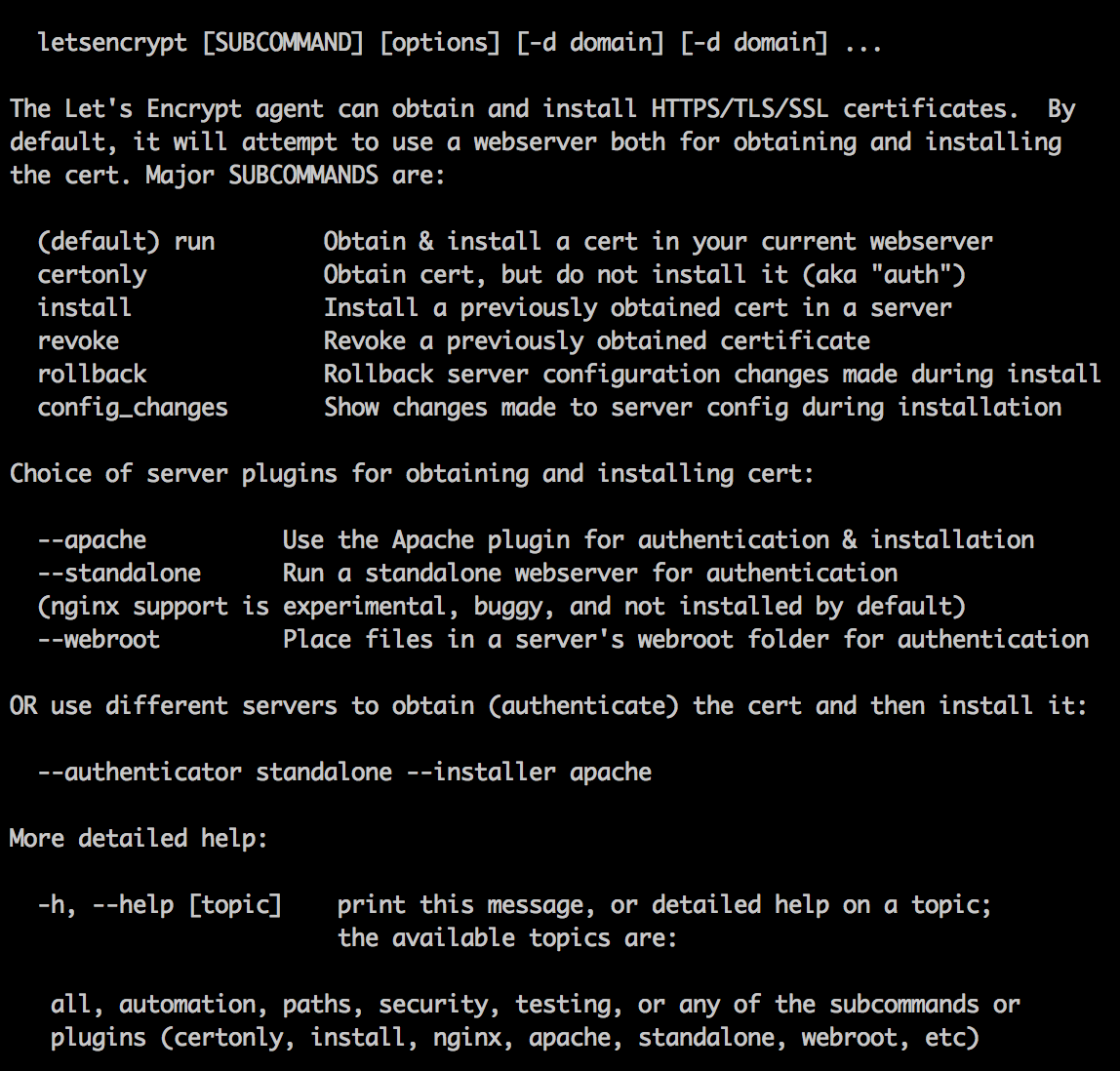

Yay, we get to the help screen:

OK, I am going to use this certificate with CloudFront which is not a supported web server plugin (the plugins allow you to run the cert application process on the destination server directly). No worries, the email I received says I can use the --manual option:

./letsencrypt-auto certonly -a manual -d www.paulwakeford.info --server https://acme-v01.api.letsencrypt.org/directory --agree-dev-preview --debug

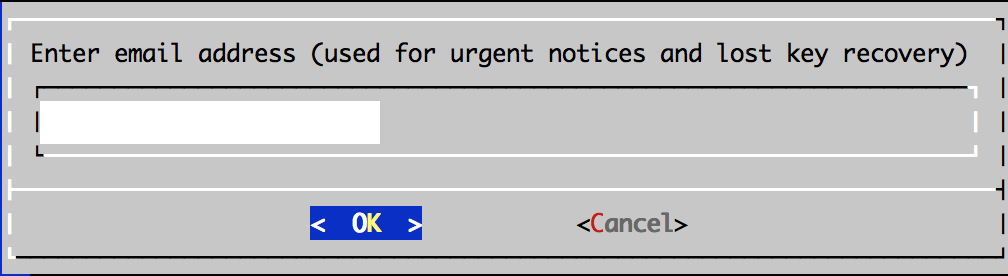

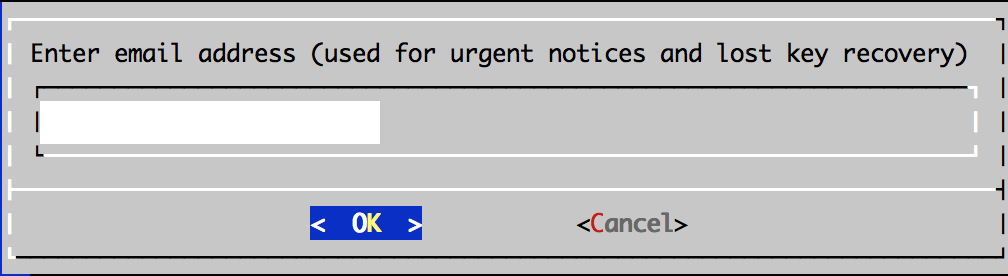

Enter email address.

Email is used for recovery, but back up your keys:

“IMPORTANT NOTES:

- If you lose your account credentials, you can recover through

e-mails sent to xxxxxxx@xxxxxxx.com.

- Your account credentials have been saved in your Let’s Encrypt

configuration directory at /etc/letsencrypt. You should make a

secure backup of this folder now. This configuration directory will

also contain certificates and private keys obtained by Let’s

Encrypt so making regular backups of this folder is ideal.”

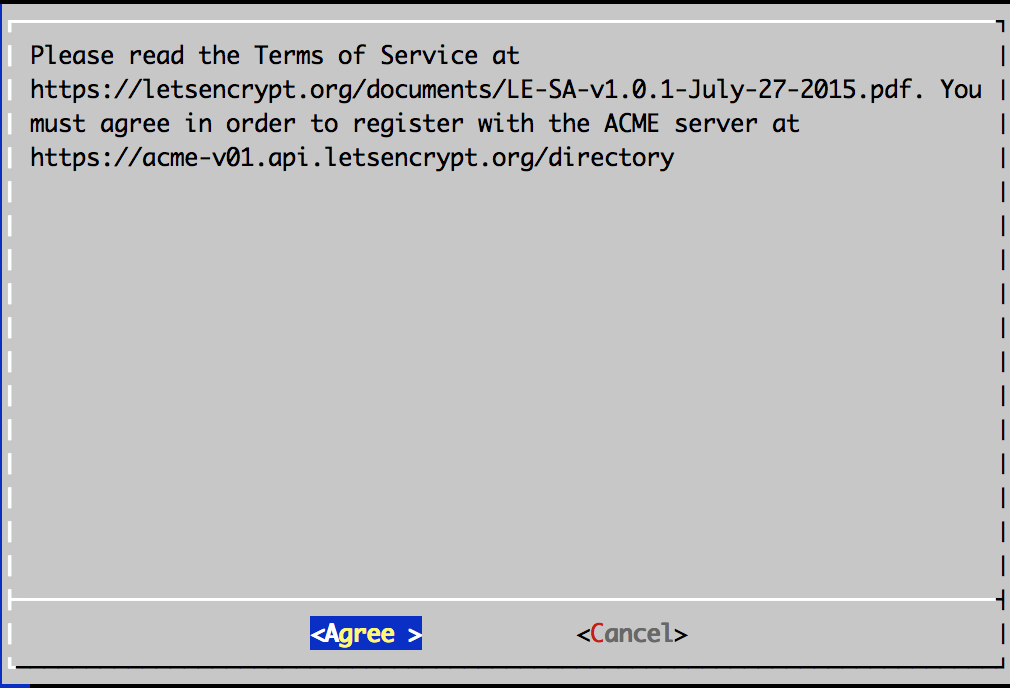

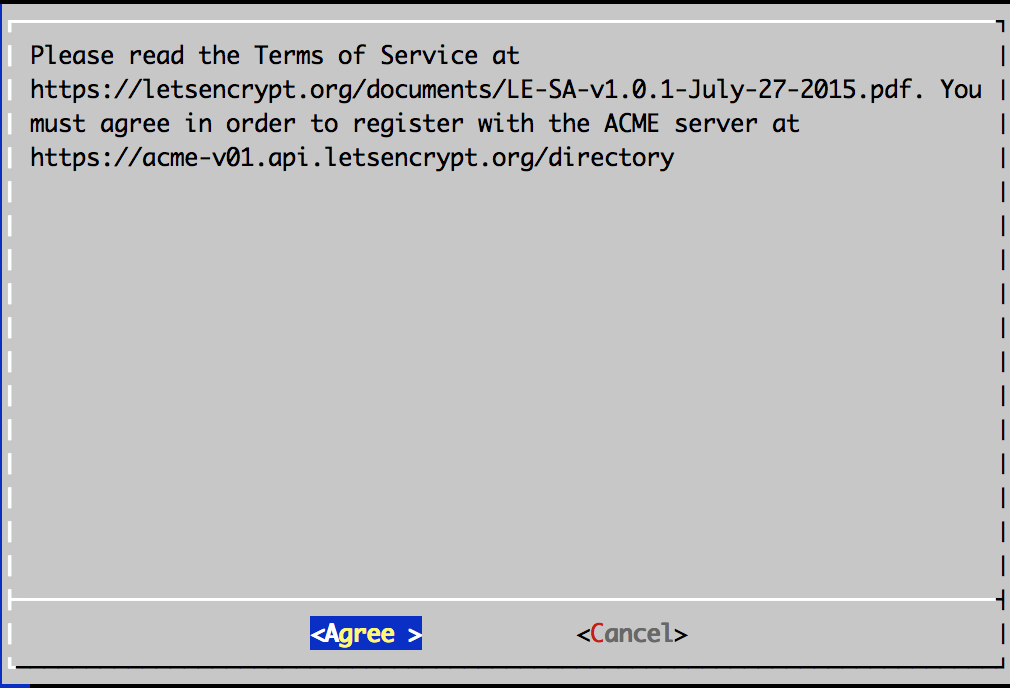

Accept terms.

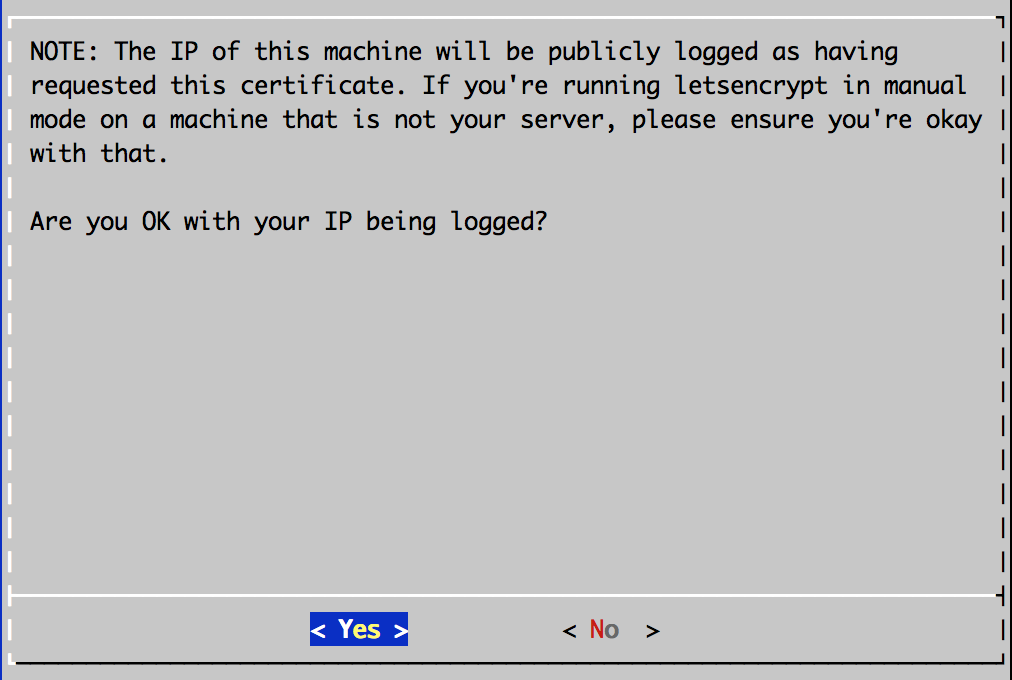

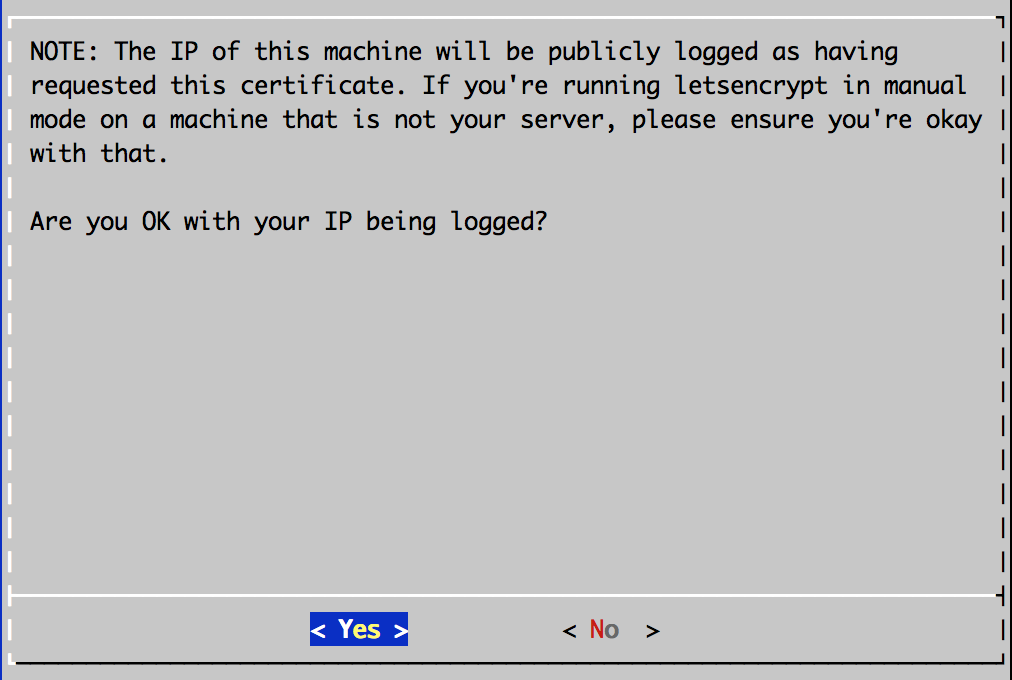

Your IP will be logged.

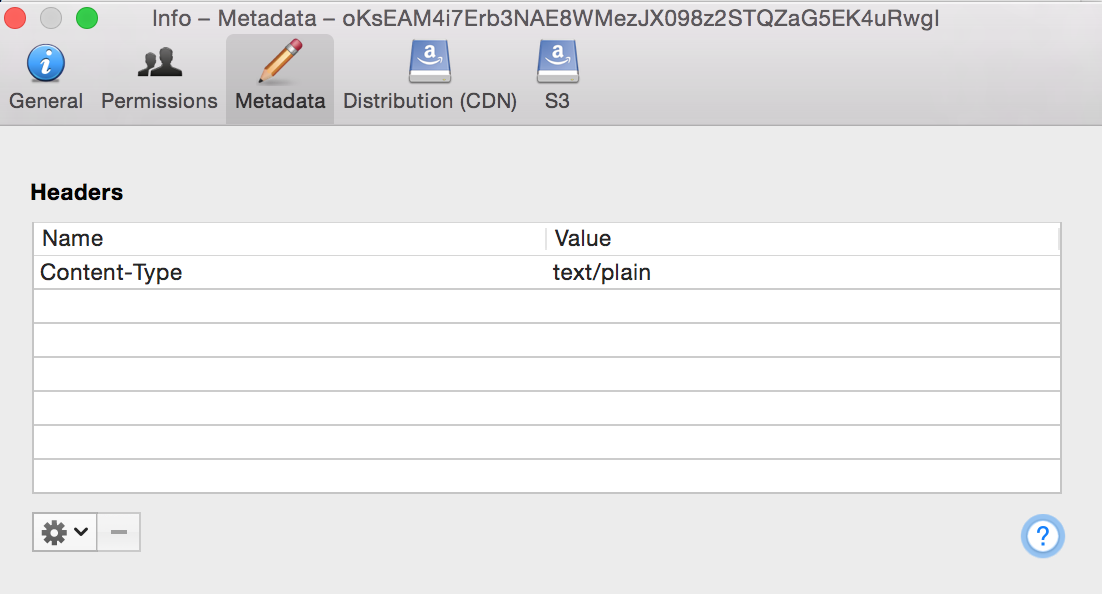

Next you need to prove you own the domain that will be covered by this SSL certificate. Let’s Encrypt does this by checking a URL on the server hosting that domain. You will be asked to create a file with a specific name and containing specific content. Instructions are given for this, including running a temporary HTTP server using Python, if required. I just created the file in the S3 bucket that hosts my web site and edited it to add the required content. You need to set the content-type to text/plain which my S3 file client of choice, Cyberduck, can do (right click, Info).

If you are running Let’s Encrypt on a supported web server (Apache etc) I’m guessing it will create this file and contents for you.

I got a failure message at this point, but also a success message:

“IMPORTANT NOTES:

- Congratulations! Your certificate and chain have been saved at

/etc/letsencrypt/live/www.paulwakeford.info/fullchain.pem. Your

cert will expire on 2016-02-22. To obtain a new version of the

certificate in the future, simply run Let’s Encrypt again.”

Let’s check what we won:

bash-3.2# ls /etc/letsencrypt/live/www.paulwakeford.info/

cert.pem chain.pem fullchain.pem privkey.pem

Great, now we just need to import it into the IAM keystore in AWS:

aws --profile personal iam upload-server-certificate --server-certificate-name www.paulwakeford.info-ssl --certificate-body file:///etc/letsencrypt/live/www.paulwakeford.info/cert.pem --private-key file:///etc/letsencrypt/live/www.paulwakeford.info/privkey.pem --certificate-chain file:///etc/letsencrypt/live/www.paulwakeford.info/chain.pem --path /cloudfront/prod/

Which gives me this response:

SERVERCERTIFICATEMETADATA arn:aws:iam::1234567890:server-certificate/cloudfront/prod/www.paulwakeford.info-ssl 2016-02-22T01:48:00Z /cloudfront/prod/ ASCAJFRF4MINVWC72UNDW www.paulwakeford.info-ssl 2015-11-24T03:05:16.110Z

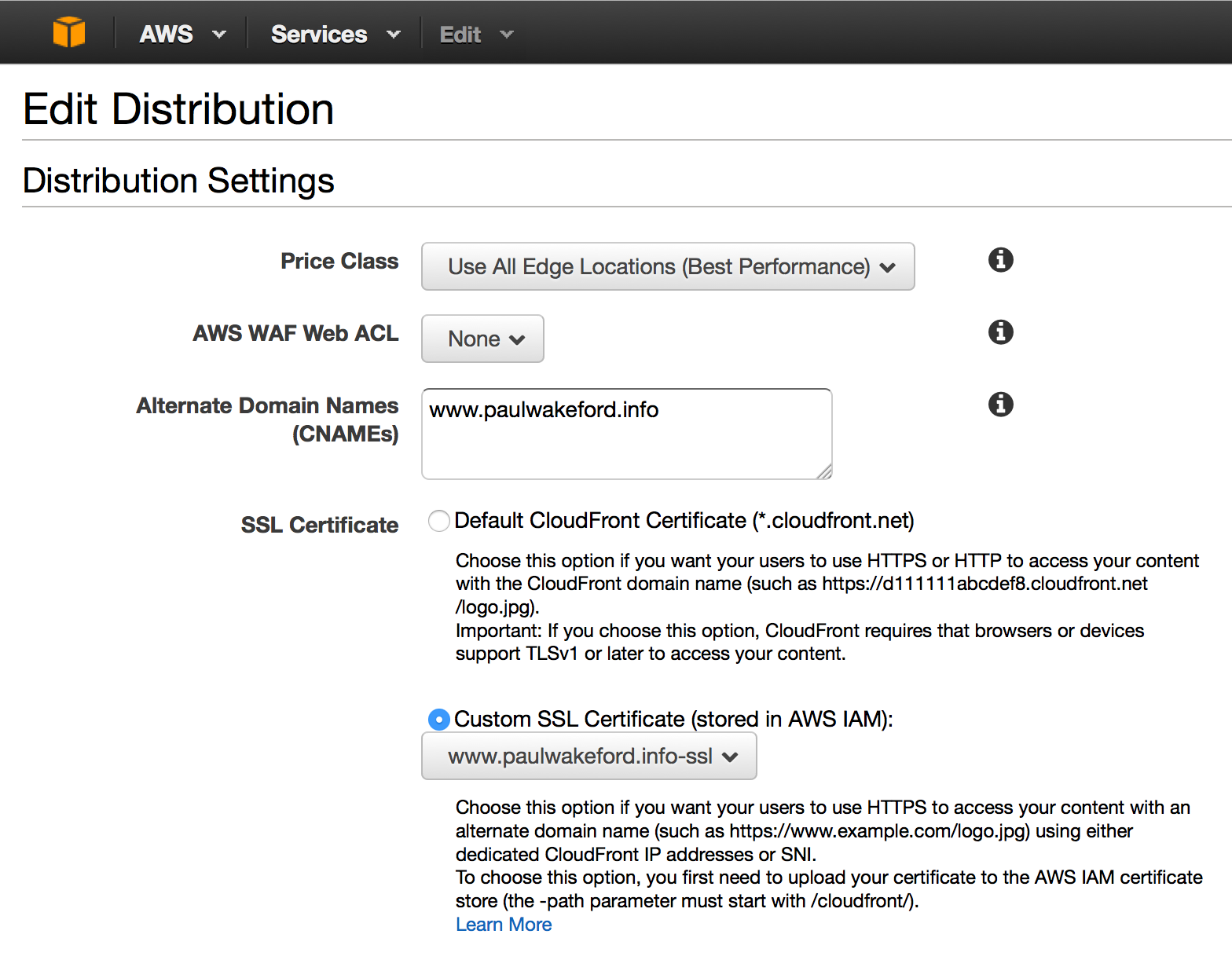

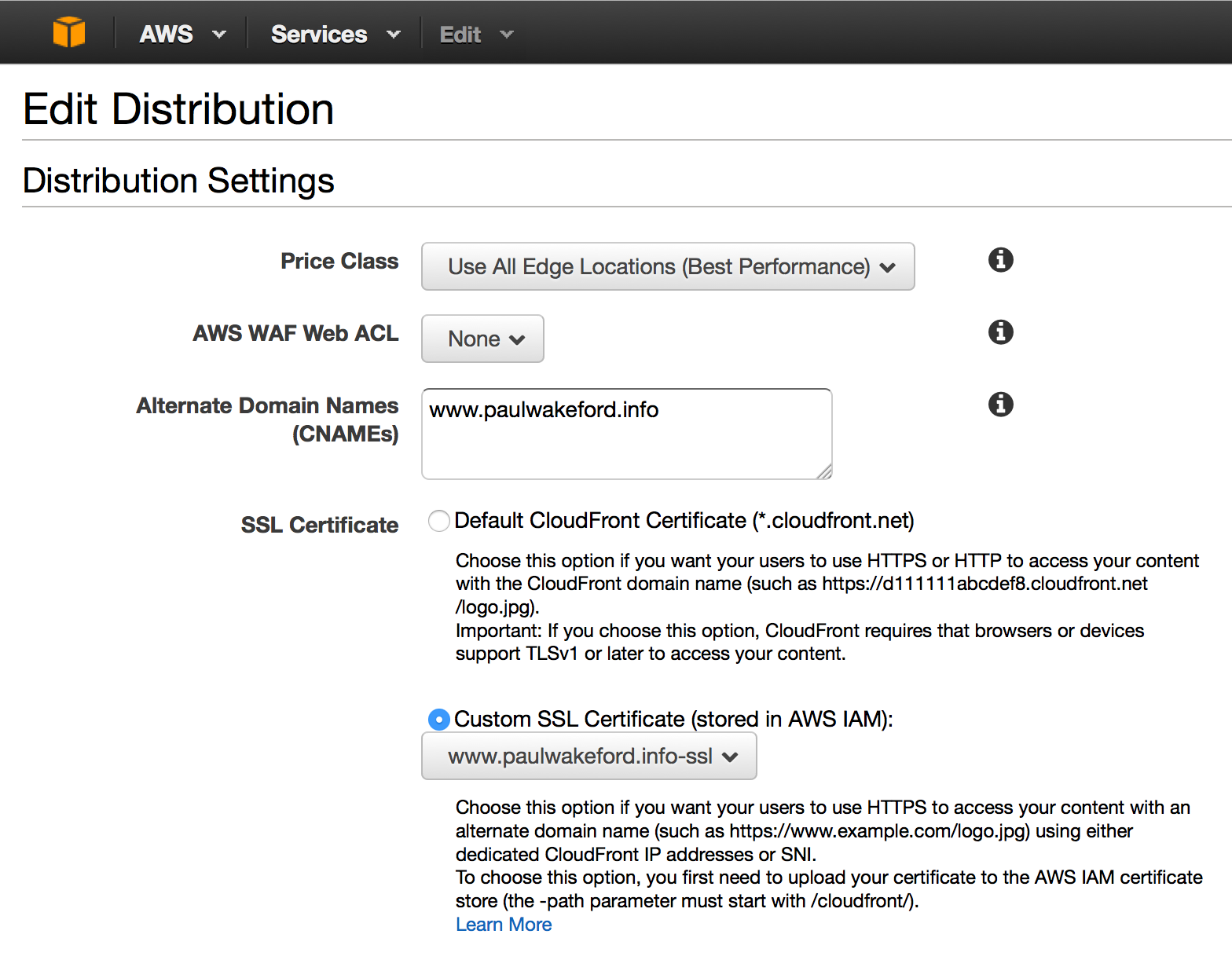

Now I edit my CloudFront distribution to specify my SSL cert:

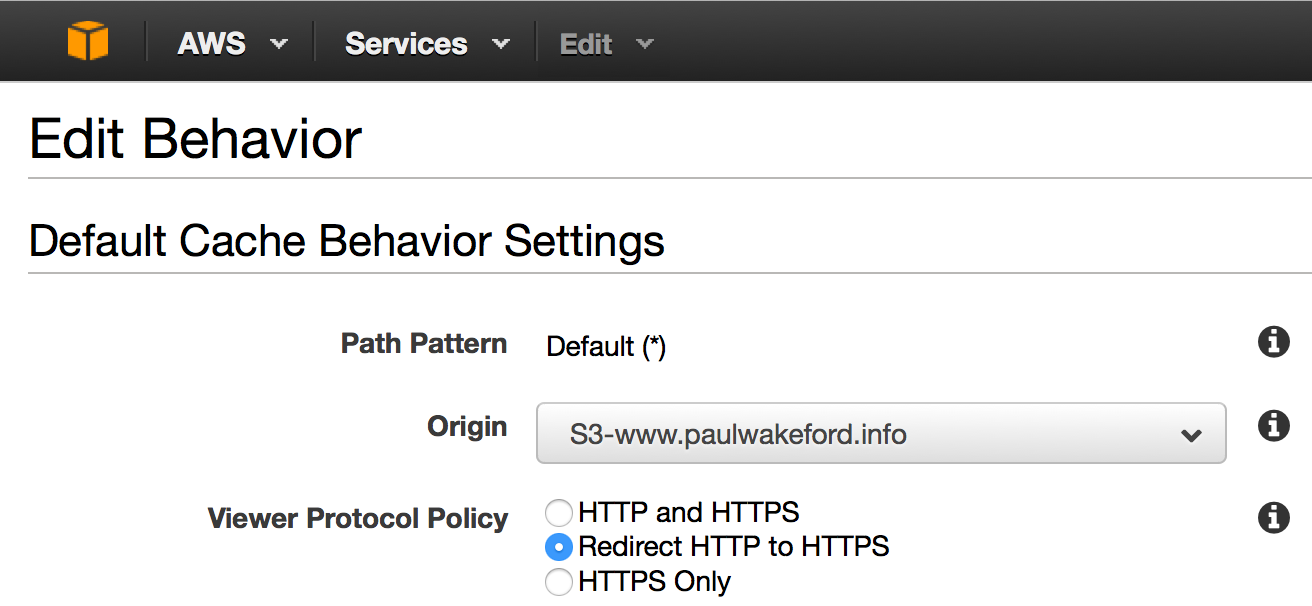

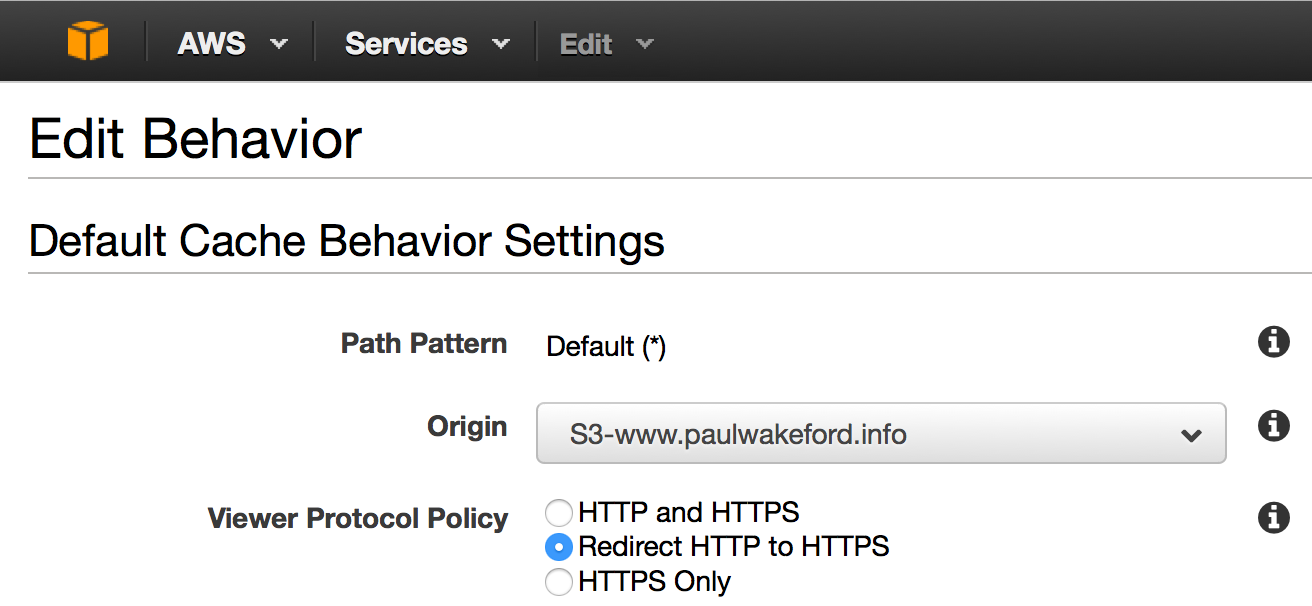

You also probably want to enforce SSL using redirection. This means users will be automatically redirected to the SSL version of your site.

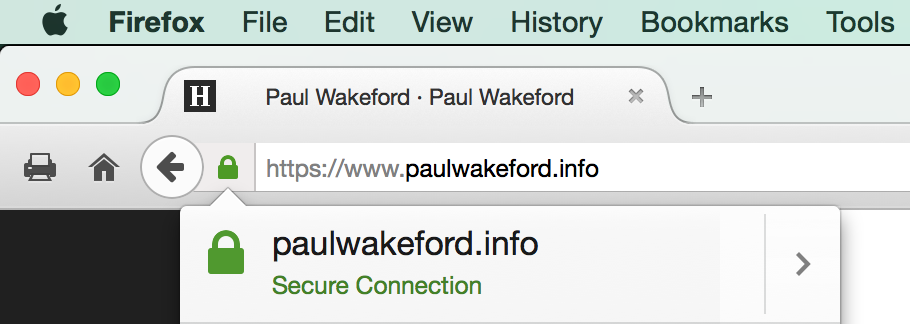

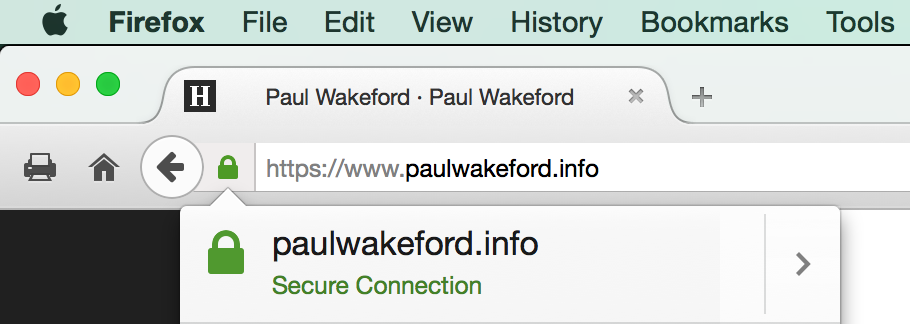

Great success!

One final wrinkle. Let’s Encrypt certificates are valid for 90 days only — this is a deliberate security mechanism. This means you need to have an automated process in place to renew your certs before they expire — they recommend every 60 days. However they do not yet have that system in place (remember this is still a beta) so certificates currently have to be manually renewed.
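Until renewal is automated, a reminder is easy to compute. The sketch below only does the date arithmetic, using the 90 day lifetime and 60 day renewal recommendation mentioned above; it does not call the Let’s Encrypt client, and the function names are made up for illustration.

```python
from datetime import date, timedelta

CERT_LIFETIME = timedelta(days=90)   # validity period stated above
RENEW_AFTER = timedelta(days=60)     # recommended renewal interval stated above


def expiry_date(issued: date) -> date:
    """Date the certificate stops being valid."""
    return issued + CERT_LIFETIME


def renewal_due(issued: date) -> date:
    """Date by which the certificate should be renewed."""
    return issued + RENEW_AFTER


def needs_renewal(issued: date, today: date) -> bool:
    """True once the renewal date has been reached."""
    return today >= renewal_due(issued)


issued = date(2015, 11, 24)          # the day this certificate was issued
print(expiry_date(issued))           # matches the 2016-02-22 expiry reported by the client
print(renewal_due(issued))
```

Run from cron with today’s date, `needs_renewal` could trigger an email reminder to repeat the manual steps.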

Mon, Nov 23, 2015

UPDATE 29/04/2016: I fixed this issue - see here.

I picked up an AWS IoT button at re:Invent this year. I didn’t really know what I would do with it and it sat in a drawer for a few weeks waiting for an idea to appear. Last week, one finally did.

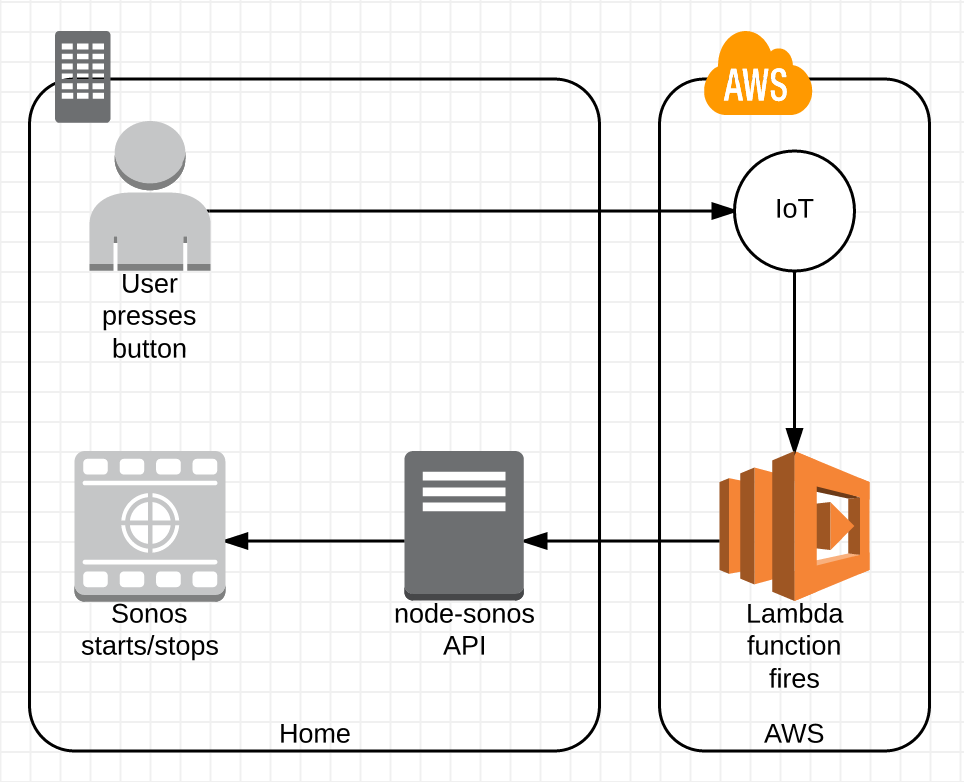

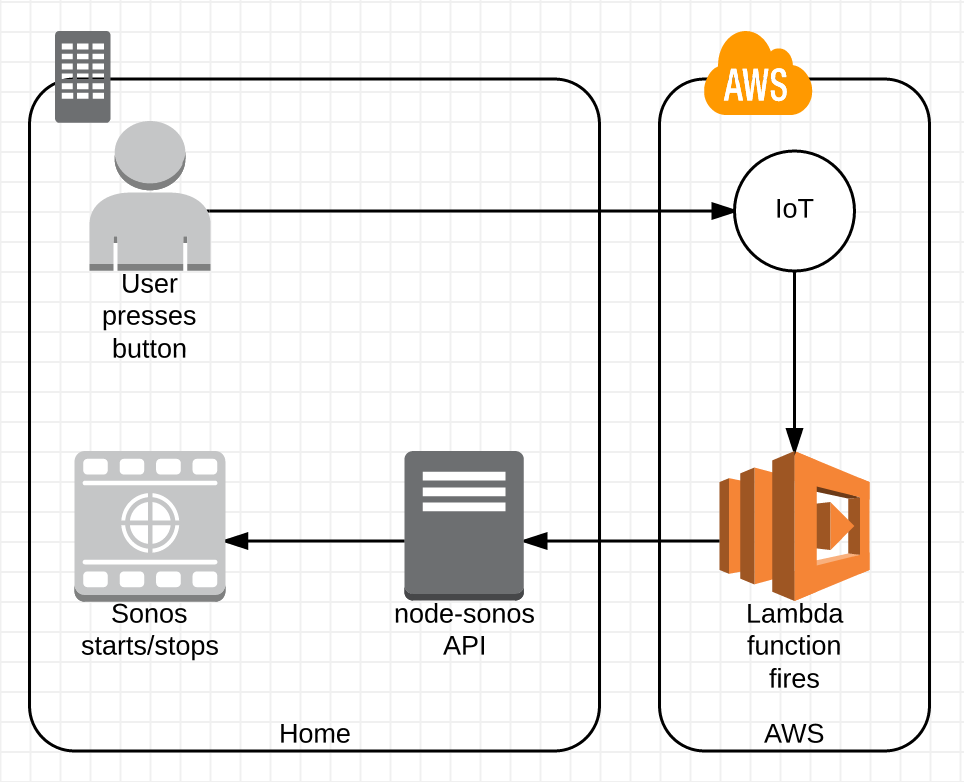

At home we have a Sonos system for multi-room audio. My wife’s main use case is to listen to triplej in the kitchen/diner and bedroom areas in the morning before she goes to work or uni. We have a few ways to control the Sonos system, each with pros and cons:

- the Sonos iOS app, which works OK and is our main control method. However it means pulling out a phone or tablet, unlocking it, tapping Rooms, tapping ‘Sonos Favorites’, tapping triplej, setting the volume to the right level.. then doing much the same when leaving the house.

- integration with our Amazon Echo. I use the echo-sonos software which generally works OK but my wife is less happy using the Echo to do this, and turning the Sonos off works less well - if there is voice coming from the speakers (e.g. news, links etc) then the Echo struggles to pick up the voice commands. The integration is also slightly awkward because of limitations in the Echo SDK (Alexa Skills Kit) which mean you have to ‘tell Sonos to play triplej’ and ‘tell Sonos to stop’.

- pressing the button on the top of the speakers - works for turning off the system but not for turning on, and we’re starting to mount the speakers off the floor so in the long term this option will go away.

So how about using the button? Press once to start triplej. Press again to stop playback. Sounds achievable.

Steps:

Link the AWS IoT button to the IoT service. The wizard does a decent job of registering your ‘Thing’ (button) and creating a default linked certificate (to authenticate your button), a policy (granting AWS access for the button) and a rule (what happens when the button is pressed).

Write Lambda code. In pseudo-code we’re just doing

get current Sonos playing state

if not playing

start triplej

else

pause playback
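The pseudo-code maps onto a small Lambda handler. The version below is a Python sketch rather than the node.js code linked from this post. The server address, port, room name and favourite name are placeholders, and the `/state`, `/pause` and `/favorite/...` paths and the `playbackState` field are assumptions about node-sonos-http-api that should be checked against its README.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

BASE_URL = "http://sonos.example.com:5005"  # placeholder for your external endpoint
ROOM = "Kitchen"                            # placeholder room name
FAVOURITE = "triplej"                       # placeholder favourite name


def http_get(url: str) -> str:
    """Fetch a URL and return the response body as text."""
    with urlopen(url, timeout=5) as response:
        return response.read().decode("utf-8")


def toggle(get=http_get) -> str:
    """Pause if the room is playing, otherwise start the favourite."""
    room = quote(ROOM)
    # Assumed response shape: a JSON object with a "playbackState" field.
    state = json.loads(get(f"{BASE_URL}/{room}/state"))
    if state.get("playbackState") == "PLAYING":
        get(f"{BASE_URL}/{room}/pause")
        return "paused"
    get(f"{BASE_URL}/{room}/favorite/{quote(FAVOURITE)}")
    return "started"


def lambda_handler(event, context):
    """Entry point for the IoT rule; the button payload is not inspected."""
    return {"action": toggle()}
```

Passing the HTTP function in as a parameter keeps the decision logic testable without a Sonos system on the network.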

node.js is the language most of the Sonos and Echo integrations use. In particular I use the node-sonos-http-api project to expose an API for Sonos play/pause/group/volume functions. I set this up a while back to support use of the echo-sonos project, including an external endpoint running on a server in my internal network, so Lambda has an endpoint to talk to. My code is here; if you use it you should:

- add more error checking

- fill in your own server IP or DNS name and port that is used for external access to your node-sonos API server

- set up your own presets within node-sonos

Set the IoT rule to trigger your Lambda function on button press.

So we end up with something that looks like this:

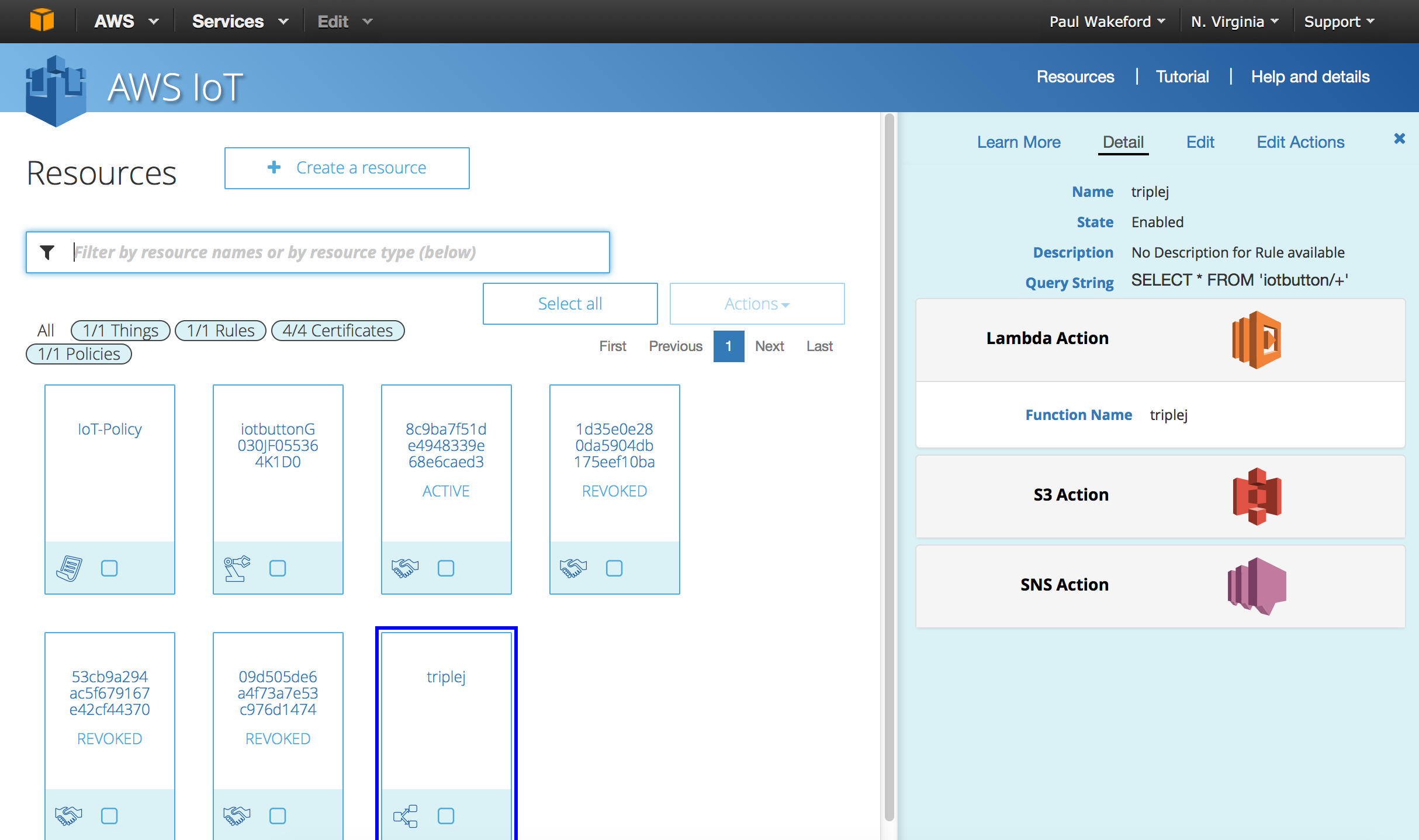

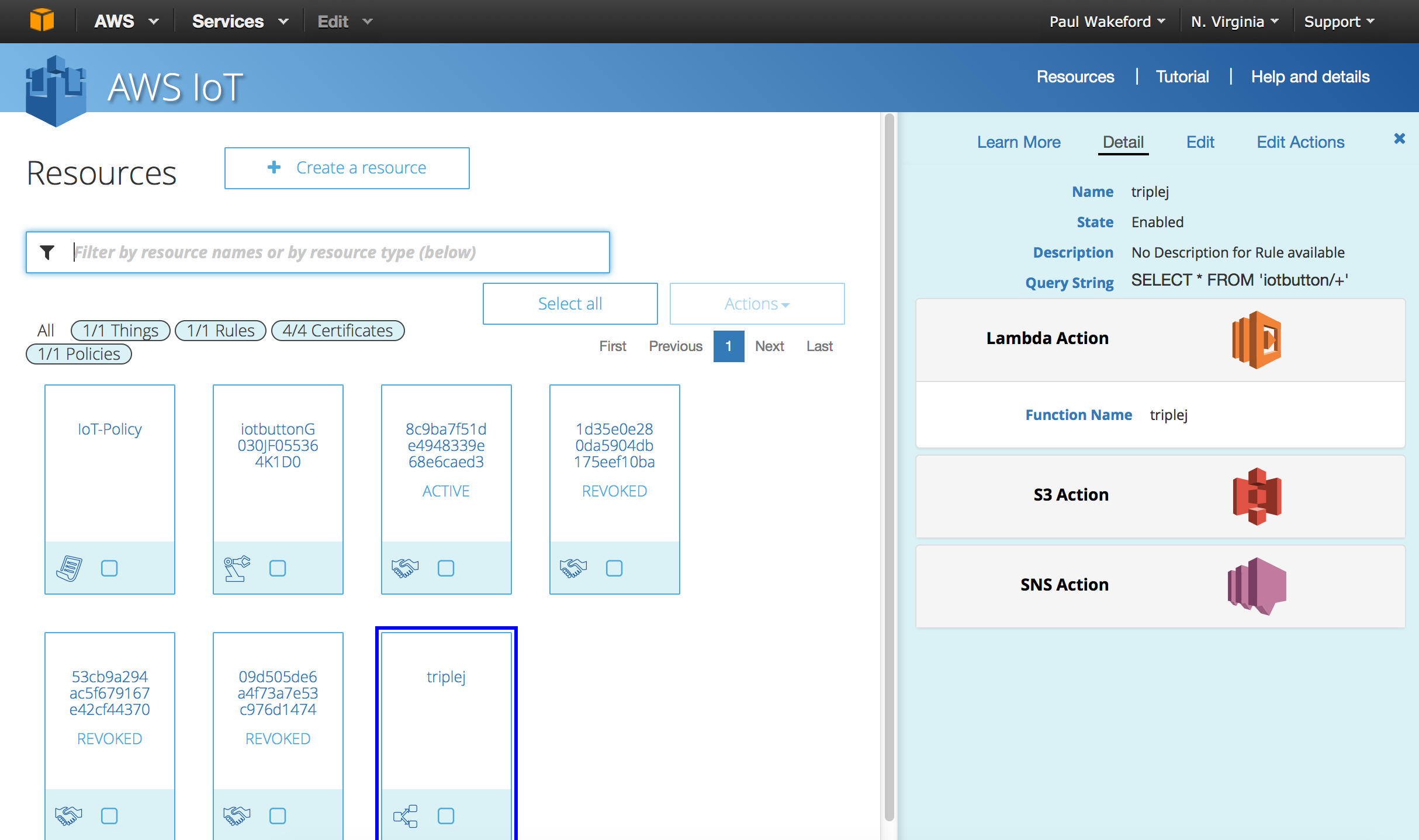

The revoked certificates are from testing (there appears to be no way to delete a certificate — so the console will get messy) and we will come to the reason for the S3 and SNS actions..

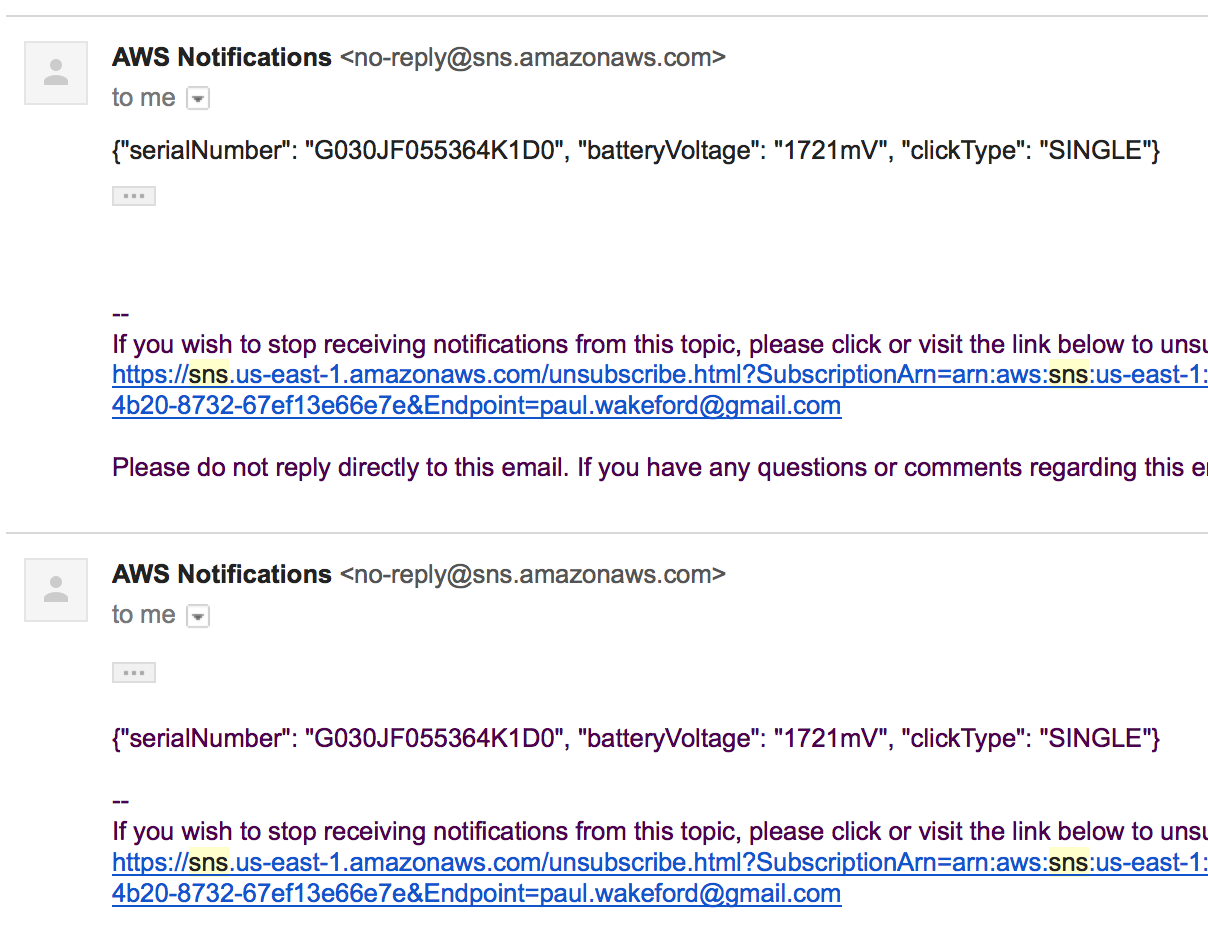

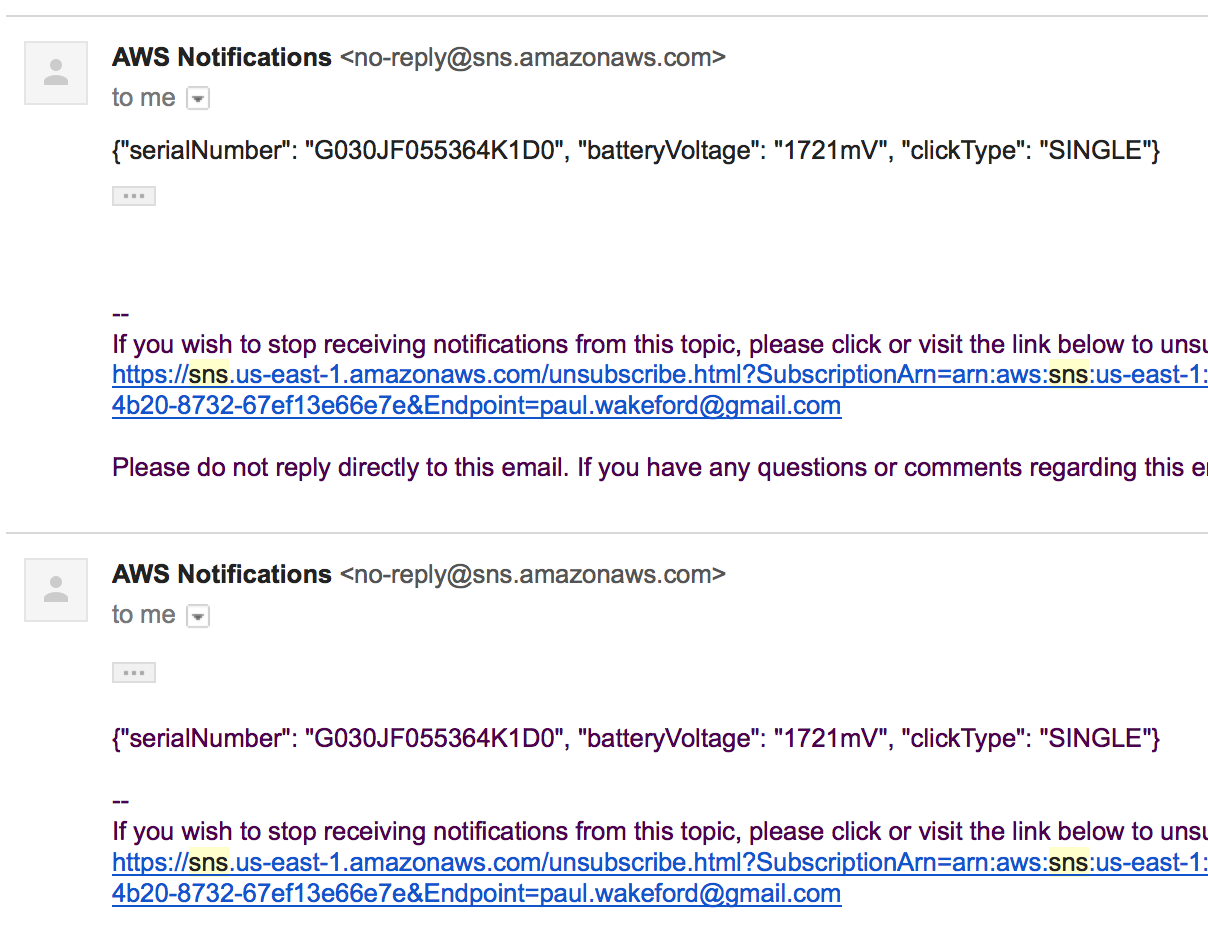

I tried testing from a starting state of silence and… yes, triplej plays. Then stops. Then plays again. Try again. It starts… and stops. Odd. Checking the Lambda logs in CloudWatch Logs shows the function is running multiple times. As I’m not an experienced node.js or Lambda developer this led me down the rabbit hole of ‘why is my code looping?’. After a while I suspected that the IoT button was triggering multiple invocations of my Lambda function but as the IoT service doesn’t directly support logging I needed another way to prove this — which is where the S3 and SNS rule actions come in. I set things up to log to S3 and to an SNS topic and then to email when the button was pressed, pressed the button once and got this:

One click, two notifications. It looks like there is an issue with my IoT button. I’ve contacted AWS to see if this is a known issue and if I can get a replacement, but given these were given away at a convention in the U.S. it’s unlikely they have spares or would be willing to ship one to me in Australia.

Back in the drawer with you, button. Not every project can be a success.

Sun, Nov 22, 2015

I have a combination of first world problems:

- My house does not have wired ethernet

- I use a video player (a Raspberry Pi 2 running OpenElec Kodi) that does not have native support for many good wifi adapters, particularly 5GHz ones

- All my content is on a NAS

This would be a good place to use ethernet over power (EoP) adapters, and I have done this for a while with mixed success. I have NetComm NP504 adapters and they are generally OK with 720p content but I have noticed they struggle streaming high bitrate 1080p, and performance generally is variable - a file that will play one day will stutter and pause the next.

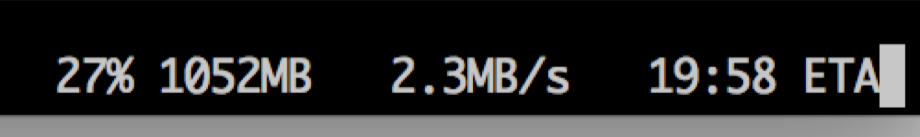

A quick check showed that 500Mbps EoP adapters are capable of around 10MB/sec. Unfortunately not for me:

This is SCPing a file from my NAS directly to an ext4-formatted USB thumb drive attached to the Pi, over the EoP adapters (directly plugged into the wall outlet - there are known issues plugging these adapters into extension cords).
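Megabits and megabytes are easy to mix up here, so a quick conversion helps put the numbers side by side. This sketch only converts units, using decimal megabits and megabytes with 8 bits per byte; it says nothing about what a particular adapter can actually deliver.

```python
def mbps_to_mbytes_per_sec(megabits_per_second: float) -> float:
    """Convert a link speed in Mbit/s to MB/s (8 bits per byte)."""
    return megabits_per_second / 8


def mbytes_per_sec_to_mbps(megabytes_per_second: float) -> float:
    """Convert a transfer rate in MB/s to Mbit/s."""
    return megabytes_per_second * 8


nominal = mbps_to_mbytes_per_sec(500)     # the adapter's headline 500Mbps, in MB/s
realistic = mbytes_per_sec_to_mbps(10)    # the ~10MB/sec figure above, in Mbit/s

print(f"500 Mbit/s nominal = {nominal} MB/s; 10 MB/s = {realistic} Mbit/s")
```

In other words, even the 10MB/sec others report is only a fraction of the headline rating on the box.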

It turns out that if your power outlets are split between different circuits your speed is badly reduced. So I can add to my list:

- My outlets do not support fast EoP.

Hard wired ethernet is on my list of jobs but access is very difficult to the ceiling where I need to drop the cables.

Fri, Nov 20, 2015

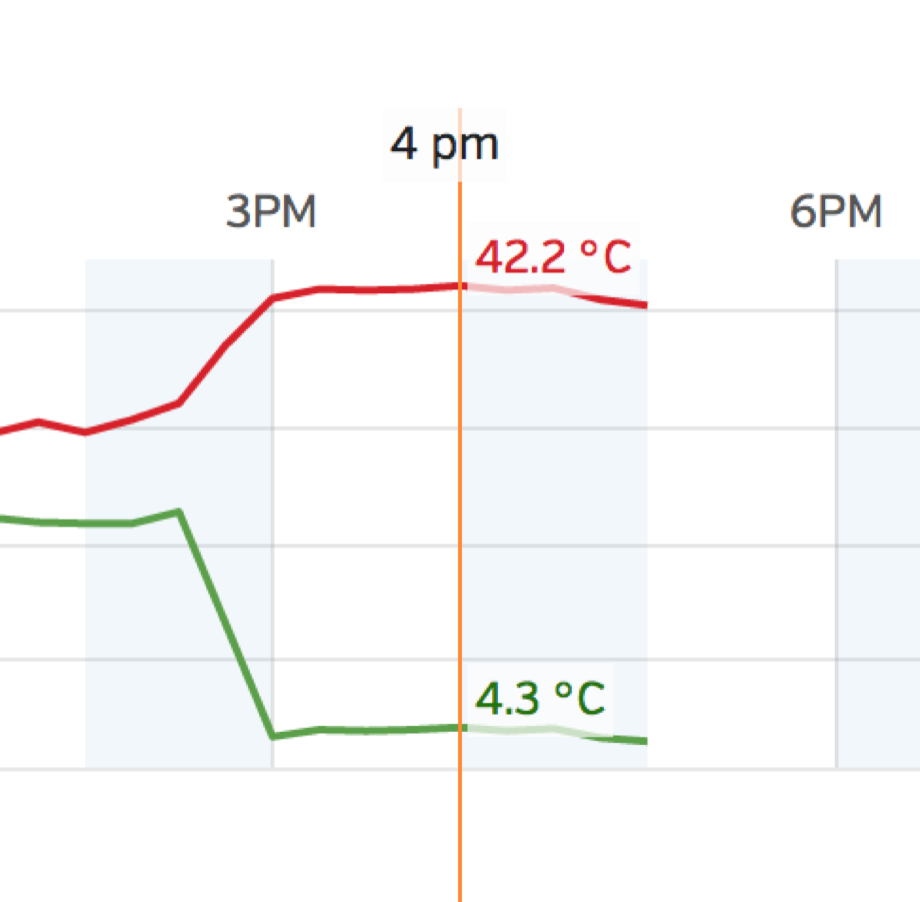

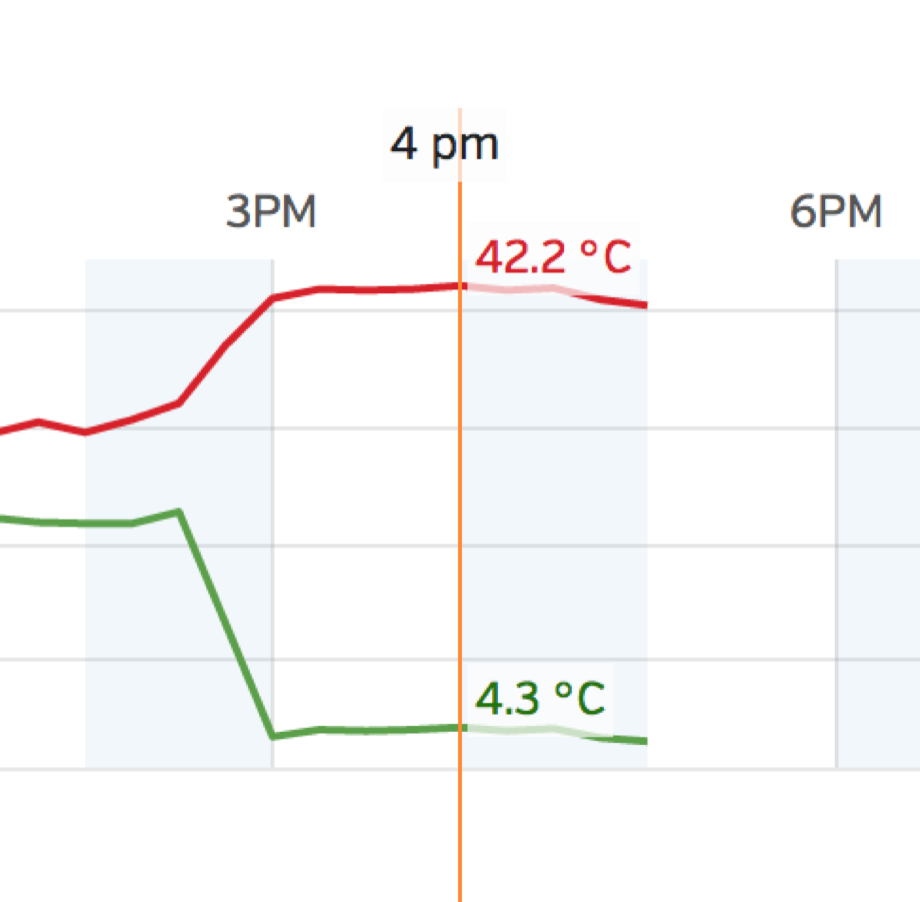

This is from the weather station on my roof on 20th November 2015:

Red is the temperature, green is dew point.

The weather station is online and pushes data to Weather Underground. I’ll detail that setup in a future post.

Fri, Nov 20, 2015

I have a Sonos PLAYBAR which doesn’t support DTS - it maxes out at DD5.1. For DTS files it downmixes to 2 channel which the PLAYBAR has a decent go at making ‘surround’ but.. it’s just not the same. Handily, ffmpeg (which I have installed via Homebrew - I use OSX) can convert the audio track to AC3 (Dolby Digital) 5.1 easily:

ffmpeg -i infile.mkv -vcodec copy -acodec ac3 -ac 6 outfile.mkv

Given I have a directory of files to convert, I went looking for something that would do a batch conversion. There are a few OSX GUI tools out there, but I thought it would be easier to borrow someone else’s shell script, and I found this one, written to use Handbrake but easily converted to use ffmpeg. I also amended it to rename the old file without the .mkv extension and name the new file .dd5.mkv, so only the new version will be picked up by Kodi when it next does a library scan. My version is up as a GitHub Gist - you will need to modify paths to suit your system and there is minimal error checking.
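For anyone who would rather not adapt a shell script, the same batch idea can be sketched in Python. This is not the Gist mentioned above: it reuses the ffmpeg arguments from this post, assumes the new files should end in `.dd5.mkv`, and the function names are invented for the example. Like the original, it has minimal error checking.

```python
import subprocess
from pathlib import Path


def ffmpeg_command(src: Path, dst: Path) -> list:
    """Build the ffmpeg call: copy the video, re-encode audio as 6 channel AC3."""
    return ["ffmpeg", "-i", str(src), "-vcodec", "copy",
            "-acodec", "ac3", "-ac", "6", str(dst)]


def output_name(src: Path) -> Path:
    """film.mkv -> film.dd5.mkv, in the same directory."""
    return src.with_name(src.stem + ".dd5.mkv")


def convert_directory(directory: Path, run=subprocess.run) -> None:
    """Convert every .mkv in directory that has not already been converted."""
    for src in sorted(directory.glob("*.mkv")):
        if src.name.endswith(".dd5.mkv"):
            continue  # skip output from an earlier run
        run(ffmpeg_command(src, output_name(src)), check=True)
        # Drop the .mkv extension from the original so a library scan ignores it.
        src.rename(src.with_suffix(""))


if __name__ == "__main__":
    convert_directory(Path("."))  # assumes ffmpeg is on the PATH
```

Because `check=True` raises on a failed conversion, the original file is only renamed after ffmpeg exits successfully.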

3. Find the Marketplace AMI which you want to use. For this example I am using the AMI “Cisco Cloud Services Router (CSR) 1000V - Bring Your Own License (BYOL)”. Some AMIs include a fee for running the software on top of the fee for running the EC2 instance, others are Bring Your Own License (BYOL) meaning you have to own or purchase a license separately. Select your region, review the costs and click Continue.

4. You have to ‘subscribe’ to access the AMI. This means accepting the terms and conditions for running the vendor software (and means that AWS can notify you if there is an issue with the AMI software, such as a security update). You can see that the ‘Launch with EC2 console’ links are inactive meaning you must accept the terms to continue. Click the ‘Accept Terms’ button.

5. You will be told to refresh the page. Do that. The ‘Launch with EC2 console’ links will now be active. Click the link next to the region in which you want to launch the instance - I chose the Sydney region.

6. You can now continue with the normal EC2 launch wizard and this time the ‘Request Spot instances’ check box is available.

Hot cross buns on display at my local supermarket - January 3rd. Relentless.
